By Louise Gray, Environment Correspondent

Professor Michael Mann plotted a graph in the late 1990s that showed global temperatures for the last 1,000 years. It showed a sharp rise in temperature over the last 100 years as man-made carbon emissions also increased, creating the shape of a hockey stick.

The graph was used by Al Gore in his film ‘An Inconvenient Truth’ and was cited by the United Nations body, the Intergovernmental Panel on Climate Change (IPCC), as evidence of the link between fossil fuel use and global warming.

But the graph was questioned by sceptics, who pointed out that it is impossible to know the global temperature with certainty before modern times, because there were no accurate readings.

The issue became a central argument in the climate change debate and was dragged into the ‘climategate’ scandal, as the sceptics accused Prof Mann and his supporters of exaggerating the extent of global warming.

However, speaking to the BBC recently, Prof Mann, a climatologist at Pennsylvania State University, said he had always made clear there were “uncertainties” in his work.

“I always thought it was somewhat misplaced to make it a central icon of the climate change debate,” he said.

In a BBC Panorama programme, scientists from both sides of the debate agree that global warming is happening and it is at least partly caused by mankind.

But they differ on how much of the recent rise in temperature has been caused by man-made emissions and on what will happen in the future.

Professor John Christy, an atmospheric scientist at the University of Alabama in Huntsville, said just a quarter of the current warming is caused by man-made emissions. He said that 10 to 30 per cent of scientists agree with him and are fairly sceptical about the extent of man-made global warming.

However, Prof Bob Watson, a UK Government adviser on climate change, said that even if severe global warming is not certain, it is worth preparing for the higher temperature projections.

“What risks are we willing to take? The average homeowner probably has fire insurance. They don’t expect a fire in their home [but] they are still willing to take out fire insurance because they don’t want the risk, and there’s probably a much better chance of us seeing the middle to upper end of that temperature projection than a single person saying they’ll have a fire in their home tomorrow morning,” he said.

See post here. See video interview here.

By Dell Hill

Update: Word is that the Jones Act was finally waived today, over 10 weeks after the explosion, so ships can begin to head for the Gulf to do the clean-up job they were designed to do.

Gotta Protect Those Unions, Ya Know!

It’s absolutely unbelievable!

There’s a ship tied up in Norfolk, Virginia. It’s an oil-skimmer, designed specifically to clean up messes such as the one now in its 66th day in the Gulf of Mexico. And there it sits, bobbing like a cork on the Atlantic Ocean waters in VIRGINIA.

Someone call the White House and alert them to the fact that the oil leak is in the Gulf, not the Atlantic, and there’s absolutely NO reason on Earth for that ship to be sitting in Virginia, doing nothing.

Peter Frost, writing for the Daily Press, files this amazing report.

“After making a brief stop in Norfolk for refueling, U.S. Coast Guard inspections and an all-out publicity blitz intended to drum up public support, a giant tanker billed as the world’s largest oil skimming vessel set sail Friday for the Gulf of Mexico where it hopes to assist in the oil-cleanup effort.

The Taiwanese-owned, Liberian-flagged ship dubbed the “A Whale” stands 10 stories high, stretches 1,115 feet in length and has a nearly 200-foot beam. It displaces more water than an aircraft carrier.

Built in South Korea as a supertanker for transporting oil and iron ore, the six-month-old vessel was refitted in the wake of the BP oil spill with 12, 16-foot-long intake vents on the sides of its bow designed to skim oil off surface waters.

The vessel’s billionaire owner, Nobu Su, the CEO of Taiwanese shipping company TMT Group, said the ship would float across the Gulf “like a lawn mower cutting the grass,” ingesting up to 500,000 barrels of oil-contaminated water a day.

But a number of hurdles stand in his way. TMT officials said the company does not yet have government approval to assist in the cleanup or a contract with BP to perform the work.

That’s part of the reason the ship was tied to a pier at the Virginia Port Authority’s Norfolk International Terminals Friday morning. TMT and its public-relations agency invited scores of media, elected officials and maritime industry executives to an hour-long presentation about how the ship could provide an immediate boost to clean-up efforts in the Gulf.

TMT also paid to fly in Edward Overton, a professor emeritus of environmental sciences at Louisiana State University, to get a look at the massive skimmer.

Overton blasted BP and the federal government for a lack of effort and coordination in their dual oil-spill response and made a plea to the government to allow the A Whale to join the cleanup operation.

“We need this ship. We need this help,” Overton said. “That oil is already contaminating our shoreline. We’ve got to get the ship out there and see if it works. There’s only one way to find out: Get the damn thing in the gulf and we’ll see.”

TMT officials acknowledged that even they are not sure how well the new skimming method will work, noting that it appeared to perform well in limited testing last week.

“This concept has never been tried before,” said Bob Grantham, a TMT project officer. “But we think we can do in maybe a day and a half what these other crews have done in 66 days. We see the A Whale as adding another layer to the recovery effort.”

Virginia Transportation Secretary Sean T. Connaughton said the McDonnell administration “still has great interest in offshore oil development in Virginia” and supports the A Whale’s effort to assist in the cleanup.

To join the fight, the ship also might require separate waivers from the Coast Guard and the U.S. Environmental Protection Agency.

The A Whale - pronounced along the lines of “A Team” because there is a “B Whale” coming - is designed to work 20 to 50 miles offshore where smaller skimmers have trouble navigating. The ship would take in oily water and transfer it into specialized storage tanks on the flanks of the vessel. From there, the oil-fouled seawater would be pumped into internal tanks where the oil would separate naturally from the water.

After the separation process, the oil would be transferred to other tankers or shore-based facilities while the remaining water would be pumped back into the gulf.

Because the process wouldn’t remove all traces of oil from the seawater, TMT will likely have to gain a special permit from the EPA, said Scott H. Segal of the Washington lobbying firm, Bracewell & Giuliani, which TMT has retained to help negotiate with federal regulators.

“The simple answer is, we don’t know what the discharge will look like until we can take A Whale out there and test it,” Segal said. TMT will work with regulators to determine an appropriate level of oil that can be contained in the ship’s discharge.

The firm is also working with the Coast Guard to gain approval to operate in the gulf, which may require a waiver from a 90-year-old maritime act that restricts foreign-flagged vessels from operating in U.S. waters, said Bob Grantham, a TMT project officer.

Connaughton, the former federal Maritime Administrator, said he doesn’t believe the A Whale would require a waiver from the Jones Act, a federal law signed in 1920 that sought to protect U.S. maritime interests.

Coast Guard inspectors toured the ship for about four hours on Thursday to determine the ship’s efficacy and whether it was fit to be deployed, said Capt. Matthew Sisson, commanding officer of the Coast Guard’s Research and Development arm in New London, Conn.

“We take all offers of alternative technology very seriously,” Sisson said. The ship, he said, is “an impressive engineering feat.”

He would not offer a timetable for Coast Guard approval of the vessel, but said he will try to “turn around a report...as soon as humanly possible.”

Of course, even if the ship gains approval to operate in the gulf, its owners expect the company to be paid for its efforts.

“That’s an open question,” Segal said. “Obviously, (TMT) is a going concern and its people would need to be compensated for their time and effort.”

It makes absolutely NO difference who pays the bill. It makes absolutely NO difference whether or not there are enough life preservers on board. It makes absolutely NO sense to have this ship parked at Norfolk, Virginia while millions of gallons of crude oil are still pumping into the Gulf daily.

He told us it would be “Hope and Change”. Is this the “change”? God, I “Hope” not!

See Giant Cleanup Ship Met with Puny Response from Bureaucrats here.

Although the cornerstone of the “greenhouse effect” is so-called “back-radiation” from “greenhouse gases” in the atmosphere, the foundation stone is the Stefan-Boltzmann law. The quotation marks in that sentence are there because, without a correct application of the law, all those principles are without basis, and so are worthless.

From: Earth’s Annual Global Mean Energy Budget, J. T. Kiehl and Kevin E. Trenberth, February 1997, Bulletin of the American Meteorological Society 78, 197-208 (see)

“For the outgoing fluxes, the surface infrared radiation of 390 W sq.m corresponds to a blackbody emission at 15C”

At face value, this looks fine, except that it assumes an ideal radiator radiating into surroundings at absolute zero (-273C). The Stefan-Boltzmann law defines the radiation of a body into a vacuum as proportional to the fourth power of its absolute temperature, multiplied by the emissivity (1 for an ideal radiator) (see here). However, the earth’s surface is not radiating into a vacuum, but into the atmosphere. For radiation into surroundings above absolute zero, the radiation is proportional to the difference between the fourth powers of the two absolute temperatures. This implies that if the temperature difference is zero or negative, there is no outgoing radiation. This is not surprising; a cooler body cannot heat a hotter body, nor can two bodies at the same temperature exchange (have a net transfer of) heat or infrared radiation. Either would violate the Second Law of Thermodynamics.

The linked page has a calculator; emitter and surroundings temperatures and emissivity can be entered to calculate radiation in Watts per sq.metre if the area of the emitter is set to 1. Given a surface temperature of 15C with emissivity 0.9 and an atmosphere at 10C, the surface would emit 23.79 W sq.m. This is far from the figures of 390 or 396 W sq.m quoted on the “energy balance” diagrams. In any case, the cooler atmosphere can’t possibly heat the hotter surface. The Stefan-Boltzmann law doesn’t allow radiative flux in the “negative” direction: a straight calculation taking the cooler atmosphere as the emitter and the surface as its surroundings gives a negative result!
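To make the arithmetic above reproducible, here is a minimal Python sketch of the two Stefan-Boltzmann expressions the paragraph contrasts: emission into a vacuum (εσT⁴) and net exchange with surroundings (εσ(Te⁴ − Ts⁴)). It assumes σ = 5.670374419e-8 W m⁻² K⁻⁴, converts Celsius to kelvin by adding 273.15, and treats the surroundings as a single uniform temperature, as the linked calculator appears to; the function names are illustrative, not taken from that calculator.

```python
# Stefan-Boltzmann constant in W m^-2 K^-4 (CODATA 2018 value)
SIGMA = 5.670374419e-8


def to_kelvin(celsius: float) -> float:
    """Convert degrees Celsius to kelvin."""
    return celsius + 273.15


def emission_into_vacuum(t_c: float, emissivity: float = 1.0) -> float:
    """Flux (W/m^2) from a surface at t_c Celsius radiating to surroundings at 0 K."""
    return emissivity * SIGMA * to_kelvin(t_c) ** 4


def net_exchange(t_emitter_c: float, t_surroundings_c: float, emissivity: float) -> float:
    """Net flux (W/m^2) from an emitter to uniform surroundings.

    The result is negative when the surroundings are warmer than the emitter.
    """
    t_e = to_kelvin(t_emitter_c)
    t_s = to_kelvin(t_surroundings_c)
    return emissivity * SIGMA * (t_e ** 4 - t_s ** 4)


if __name__ == "__main__":
    # Ideal blackbody at 15 C radiating into a vacuum
    print(f"{emission_into_vacuum(15.0):.1f} W/m^2")
    # Surface at 15 C, emissivity 0.9, surroundings at 10 C
    print(f"{net_exchange(15.0, 10.0, 0.9):.2f} W/m^2")
    # Cooler atmosphere (10 C) as emitter, surface (15 C) as surroundings
    print(f"{net_exchange(10.0, 15.0, 0.9):.2f} W/m^2")
```

The first line reproduces the roughly 390 W sq.m figure quoted by Kiehl and Trenberth, the second the 23.79 W sq.m calculator result, and the third the negative value mentioned at the end of the paragraph.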

Kiehl and Trenberth (and others) back-calculate the temperature of the top-of-atmosphere (TOA) from the measured (by satellite) outgoing infrared radiation of 235 W sq.m as -19C, assuming an ideal emissivity of 1. They then conclude that the surface would emit the same without an atmosphere despite the surface having a smaller area, and neither surface nor atmosphere having the ideal emissivity (likely to be more like 0.9 for land/water), and even then, not identical emissivities. The TOA isn’t even a surface, but a layer. I can find no quoted figure for the emissivity at TOA, so I have no idea what the correctly calculated temperature at TOA might be. The assumed surface temperature of -19C “without greenhouse effect” has to be wrong in any case. The Ideal Gas Law can be used to calculate the air temperature at the surface, assuming a nitrogen/oxygen/water-vapour atmosphere at a pressure of 1 bar. This gives about 15C with no requirement for any input from the sun. A calculation for the atmosphere of Venus (surface pressure 93 bar) gives a result close to the observed surface temperature. The difference can be explained by the unique atmospheric conditions on the planet; there is no need for any “greenhouse” effect calculations. Indeed, with an atmosphere similar to that on Earth, the calculated temperature is around 200C hotter.
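The -19C figure comes from inverting the Stefan-Boltzmann law, T = (F / εσ)^(1/4). The short Python sketch below shows that inversion for the 235 W sq.m outgoing flux and how the back-calculated temperature shifts if an emissivity below 1 is assumed. The emissivity values other than 1 are illustrative assumptions only, since, as noted above, no quoted TOA emissivity is available; the function name is hypothetical.

```python
# Stefan-Boltzmann constant in W m^-2 K^-4 (CODATA 2018 value)
SIGMA = 5.670374419e-8


def brightness_temperature_c(flux_w_m2: float, emissivity: float = 1.0) -> float:
    """Invert F = emissivity * SIGMA * T^4 for T, returned in degrees Celsius."""
    if flux_w_m2 <= 0 or not 0 < emissivity <= 1:
        raise ValueError("flux must be positive and emissivity in (0, 1]")
    t_kelvin = (flux_w_m2 / (emissivity * SIGMA)) ** 0.25
    return t_kelvin - 273.15


if __name__ == "__main__":
    outgoing = 235.0  # satellite-measured outgoing infrared, W/m^2
    # Emissivities below 1 are assumed values for illustration only
    for e in (1.0, 0.95, 0.9):
        print(f"emissivity {e:.2f}: {brightness_temperature_c(outgoing, e):.1f} C")
```

With an emissivity of 1 this gives about -19.4C; any lower assumed emissivity raises the back-calculated temperature, which is why the choice of emissivity matters to the argument.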

The “greenhouse effect” is a failed hypothesis; if its proponents employed real physics instead of misapplied laws and bad assumptions it would fall apart. The panes of the greenhouse are cracked; those that aren’t cracked are missing.

Ernest Rutherford: “If your experiment needs statistics, you ought to have done a better experiment.”

top of atmosphere (from NASA Data Glossary):

a given altitude where air becomes so thin that atmospheric pressure or mass becomes negligible. TOA is mainly used to help mathematically quantify Earth science parameters because it serves as an upper limit on where physical and chemical interactions may occur with molecules in the atmosphere. The actual altitude used for calculations varies depending on what parameter or specification is being analyzed. For example, in radiation budget, TOA is considered 20 km because above that altitude the optical mass of the atmosphere is negligible. For spacecraft re-entry, TOA is rather arbitrarily defined as 400,000 ft (about 120 km). This is where the drag of the atmosphere starts to become really noticeable. In meteorology, a pressure of 0.1 mb is used to define this location. The actual altitude where this pressure occurs varies depending on solar activity and other factors.

See post here. See more here. See reality check here. Also:

It appears that if any “greenhouse effect” occurs due to CO2 in our atmosphere, that effect is very small compared to the 3-dimensional effects of distributed heat with convection heat transfer. That this is so has long been known by NASA, which nonetheless has played a very major role in the promotion of AGW alarmism on the basis of greenhouse gases! Once again, we find that government is not worthy of our trust.

Let us return to the much simpler case of the moon. It has no atmosphere, no vegetation, and no oceans to muck things up. There is a paper by Martin Hertzberg, Hans Schreuder, and Alan Siddons called A Greenhouse Effect on the Moon?, which Dr. Hertzberg was kind enough to draw my attention to in early June and I have had in mind discussing its very important results ever since.

-- Charles R. Anderson, Ph.D., in this blog. He also followed up with this blog post.

By Dr. Anthony Lupo

The early edition paper by Anderegg et al. (2010) [1], published in the Proceedings of the National Academy of Sciences on June 22nd, will get a lot of attention from the media for pointing out that not all experts on climate change are equal. The title of the paper is Expert Credibility in Climate Change. The authors use statistics to show that the ‘top researchers who are convinced by the evidence (CE) of anthropogenic climate change (ACC) have much stronger expertise in climate science than those top researchers who are unconvinced by the evidence (UE)’ [1].

Of the four authors on the paper, only Professor S.H. Schneider appears on their list of climate experts, and he is likely the driving force behind this paper. He is the same scientist who was warning us about catastrophic global cooling in the late 1970s. He is also one of those who believe that CE scientists need to be more aggressive in pressing the case for ACC to a public that remains unconvinced. In fact, the public has grown more skeptical of ACC in the wake of the East Anglia e-mail scandal and the fudging of the dates of Arctic sea ice loss and glacier loss in the Himalaya Mountains.

This study is a sign of just how desperate warmers are to capture the public’s attention and approval. They imply that policy makers should listen to only the credible experts (as defined by this study?). The study also leads to the implication that information on climate change should be controlled by credible experts, again, as defined by this study?

The study does rightly point out that there are problems with its methodology that might make the list less than comprehensive. The authors also correctly imply that their methodology is subjective, and they state that ‘publication and citation are not perfect indicators of researcher credibility’ [1]. They even acknowledge that their conclusions may contain biases that cannot be dealt with, such as self-citation and clique citation. None of these shortcomings will be discussed by those using the article as support for their position.

The latter could be a major problem, as there are cultural pressures that could make clique citation and biases problematic. For example, in the late 1500s there were religious pressures on scientists that made acceptance of Copernicus’s ideas dangerous, especially in the public arena. In more recent history, I would bet that a study similar to Anderegg et al. would have shown similar results in the literature against the acceptance of Darwin in the mid-to-late 1800s, or against the acceptance of plate tectonics and Milankovitch’s orbital parameter theories in the 20th century. In modern times, these cultural pressures could include the pressure to get funded, or politics, the latter of which has unfortunately become intertwined with the climate change debate.

Additionally, the Anderegg et al. study seems to be a case study for introductory statistics classes. In these classes we are cautioned that “if you torture the data long enough it will confess”. Otherwise, the paper does not deserve any attention, but unfortunately it is getting a lot of ink.

Finally, the publication has generated some excitement because it has resulted in lists and rankings of climate researchers, and the one getting the most attention is a list of 496 “climate deniers” [2], which has been called a “blacklist”. This is the list of UE experts whose credentials were found not to be on par with those of the CE community. Scientists are generally reticent to talk about themselves, but one might ask those of us on that list (and I have been asked many times) how can you be a skeptic in the face of overwhelming consensus among the scientific community?

Consensus should not determine one’s position on any scientific matter; only the weight of the evidence gathered from self-examination should be considered. The evidence suggests that the climate changes of the last 150 years are not unusual when compared to our best reckoning of the last 2000 years [3], [4], and the further back in time one goes, the less unusual the current climate changes seem [5]. There are many things that we still do not understand about climate and climate change, and new information and ideas are constantly coming to light [6]. Lastly, there are problems with the model forecasts that are well-documented and make the projection of future climate change difficult [7], [8]. These “forecasts” are scenarios, not predictions; there is a big difference.

These are but some of the arguments that lead to my own skepticism that ACC is a major driver of changes we’re seeing in climate now. There are literally thousands of publications out there that support these points. If you ask any skeptic on the “climate deniers” list, they would agree with me. Many of these people I have come to know through their writings or by personal contact, and they are top-notch scientists who support their position with integrity and passion. Many of them are brave and stand on their principles in spite of the “overwhelming consensus” brought to us by [1]. I am honored to be on the same list with them. See PDF with references here.

UK Telegraph

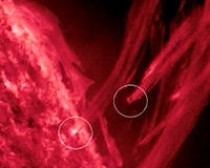

A dire warning came out of a “space weather” conference in Washington, D.C. last week: around the year 2013, the National Aeronautics and Space Administration (NASA) says, solar flare activity on the sun will cause a “space storm” which will have a huge impact on Earth, potentially causing 20 times more economic damage than Hurricane Katrina.

The new NASA Solar Dynamics Observatory (SDO), launched only in February, has already recorded massive solar plasma “rainstorms” which, luckily, did not come near us. One storm, recorded April 19, took place over four hours, with “plumes” large enough to fit our planet between them.

UK Telegraph:

In a new warning, NASA said the super storm would hit like “a bolt of lightning” and could cause catastrophic consequences for the world’s health, emergency services and national security unless precautions are taken. Scientists believe it could damage everything from emergency services’ systems, hospital equipment, banking systems and air traffic control devices, through to “everyday” items such as home computers, iPods and Sat Navs.

Due to humans’ heavy reliance on electronic devices, which are sensitive to magnetic energy, the storm could leave a multi-billion pound damage bill and “potentially devastating” problems for governments. “We know it is coming but we don’t know how bad it is going to be,” Dr Richard Fisher, the director of NASA’s Heliophysics division, said in an interview with The Daily Telegraph.

“It will disrupt communication devices such as satellites and car navigations, air travel, the banking system, our computers, everything that is electronic. It will cause major problems for the world. Large areas will be without electricity power and to repair that damage will be hard as that takes time.” Dr Fisher added: “Systems will just not work. The flares change the magnetic field on the earth that is rapid and like a lightning bolt. That is the solar effect.”

It warned that a powerful solar storm could cause “twenty times more economic damage than Hurricane Katrina”. That storm devastated New Orleans in 2005 and left an estimated damage bill of more than $125bn. Dr Fisher said precautions could be taken, including creating back-up systems for hospitals and power grids and allowing development of satellite “safe modes”. “If you know that a hazard is coming...and you have time enough to prepare and take precautions, then you can avoid trouble,” he added. See more here.

By Andrew Orlowski, theregister.co.uk

Dead broke Spain can’t afford to prop up renewables anymore. The Spanish government is cutting the numbers of hours in a day it’s prepared to pay for “clean” energy.

Estimates put the investment in solar energy in Spain at €18bn - but the investment was predicated, as it is with all flaky renewables, on taxpayer subsidies. With the country’s finances in ruins, making sacrifices for the Earth Goddess Gaia is an option Spain can no longer afford. Incredibly, Spain pays more in subsidies for renewables than the total cost of energy production for the country. It leaves industry with bills 17 per cent higher than the EU average.

“We feel cheated”, Tomas Diaz of the Spanish Photovoltaic Industry Association told Bloomberg. But it’s undoubtedly taxpayers who have been cheated the most.

“Sustainability” has been the magic word that extracted large sums of public subsidy that couldn’t otherwise have been rationally justified using traditional cost/benefit measures. Spain paid 11 times more for “green” energy than it did for fossil fuels. The public makes up the difference. The renewables bandwagon is like a hopeless football team that finishes bottom of the league each year - but claims it’s too special ever to be relegated.

For sure, you can create a temporary jobs boom, but these jobs are artificial, and the exercise is as useful as paying people to dig a hole in the ground, then fill it in. Spanish economist Professor Gabriel Calzada, of King Juan Carlos University in Madrid, estimated that each green job had cost the country $774,000.

Worse, a “green” job costs 2.2 jobs that might otherwise have been created - a figure Calzada derived by dividing the average subsidy per worker by the average productivity per worker. Industry, which can’t afford to pay the higher fuel bills, simply moves elsewhere.
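The 2.2 figure is described as a simple quotient. A minimal Python sketch of that calculation follows; the function name and the input numbers are hypothetical placeholders chosen only to show the arithmetic, not figures taken from Calzada’s paper.

```python
def jobs_displaced_per_green_job(avg_subsidy_per_worker: float,
                                 avg_productivity_per_worker: float) -> float:
    """Ratio as described in the text: average subsidy per green worker
    divided by average productivity per worker in the wider economy.

    Both inputs must be in the same currency unit per worker.
    """
    if avg_productivity_per_worker <= 0:
        raise ValueError("productivity per worker must be positive")
    return avg_subsidy_per_worker / avg_productivity_per_worker


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only
    print(jobs_displaced_per_green_job(110_000, 50_000))
```

Any pair of inputs whose ratio is 2.2 reproduces the quoted figure; the sketch says nothing about how the underlying subsidy and productivity averages were estimated.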

You can download Calzada’s paper here (pdf) and find more details of the subsidy cuts here.

You can also read how the UK’s “sustainability” mob plan to fleece your wallet, here and here.

-------------

And from Germany:

Campbell Dunford, director of the Renewable Energy Foundation (REF), says that Germany - which has the largest number of wind turbines in Europe - “is building five new coal power stations, which it does not otherwise need, purely to provide covering power for the fluctuations from their wind farms. I am not sure [wind] has been a great success for them.” Mr Dunford claims that Germany’s CO2 emissions have actually risen since it increased its use of wind power. Though the wind itself might, in RUK’s words, be “free,” the cost of backup capacity is likely to be astronomical.

Photo courtesy: Energy Tribune (enlarged here)

The Scientific Alliance June 18, 2010 Newsletter

Many commentators have compared environmentalism to religion. As church attendance in the West has plummeted over the last few decades, so has concern for nature tended to increase. Both trends may be independent results of growing prosperity and security; we feel more in control of our own existence, but at the same time are more aware of our impact on the planet.

But an equally compelling argument is that there is a deep need in the human psyche for a spiritual dimension to our lives and that, if this need is not met via organised religion, then the awe inspired by nature can replace this. One aspect of this manifests itself as respect for the environment and a desire not to cause harm, which is to be welcomed. However, this can be taken to the extreme by ‘deep greens’ for some of whom humans are a blight on the face of the planet, which would be better off without us. That we are apparently the only species with this capacity for self-loathing is worthy of an essay in itself.

But such an attitude - which has much in common with the views of religious ascetics - is itself a manifestation of a generalised guilt, which provides the basis for the Adam and Eve story and the concept of Original Sin. Hardly surprising, then, that some in the UN and EU are suggesting that a whole host of things which are considered bad for the environment might be taxed or even rationed to encourage ‘correct’ behaviour. It’s our fault, and we must pay the price.

This was among the topics discussed at this year’s Brussels Green Week, held earlier this month. Angela Cropper, deputy executive director of the UN Environment Programme (UNEP), presented the new report from the International Panel for Sustainable Resource Management, Assessing the Environmental Impacts of Consumption and Production - Priority Products and Materials. It analyses the environmental impact of consumption of food, materials and energy and makes recommendations for greater resource efficiency.

The conclusion is that agriculture (particularly livestock farming), fossil fuel use and metals (particularly iron, steel and aluminium) have the greatest environmental impact. This is hardly surprising: food is essential and energy hardly less so, with - for better or worse - a dependence on coal, gas and oil for the foreseeable future. Iron, steel and aluminium are also ubiquitous as construction materials.

To quote from the report’s executive summary:

“Agriculture and food consumption are identified as one of the most important drivers of environmental pressures, especially habitat change, climate change, water use and toxic emissions. The use of fossil energy carriers for heating, transportation, metal refining and the production of manufactured goods is of comparable importance, causing the depletion of fossil energy resources, climate change, and a wide range of emissions-related impacts.”

Of course, the extent to which any of these areas can be said to ‘harm’ the environment depends on your definition. No-one would argue with a definition that includes water and air pollution or localised scarring of the landscape. But anything we do will affect the environment in some way. The focus of this report is mainly on the prosperous countries of the world, which use far more resources than developing countries (although countries such as Brazil, India and China are trying their hardest to catch up). So, the resources used in Europe are much greater than in many parts of the world, but concern for the environment is also much greater.

Air and water quality have improved significantly in the lifetime of most adult Europeans. From a desire after the Second World War to produce as much food as possible, the attitude to farming is now much more one of balancing production and environmental impact. In the poor countries of sub-Saharan Africa, South Africa and other deprived areas of the world, on the other hand, farmland is often badly eroded, water polluted and indoor air dangerously polluted by smoke from cooking fires. Which type of society is having a larger impact on the environment?

But, given the current high-profile concern about the enhanced greenhouse effect warming the planet, environmental assessments like this give a heavy weighting to fossil fuel use. Hence the message from Angela Cropper at Green Week, as quoted by Euractiv: “Put down the steak knife, flip off lights, insulate homes, turn down the thermostat or air conditioner, avoid air travel and park the car as much as possible.”

This message was supported by Environment Commissioner Potočnik at the Commission-organised event. He stressed the need for taxation to guide people towards the correct choices. Others think that moral persuasion is the key. Magda Stoczkiewicz, the director of Friends of the Earth Europe (which receives significant funding from the Commission), spoke of playing the guilt card alongside taxes to force businesses to increase efficiency.

And to quote Euractiv again: “Willy De Backer, head of ‘Greening Europe’ at Friends of Europe, a Brussels-based think-tank, said ‘moral indignation’ is necessary to change consumer behaviour and habits, and ultimately decrease humanity’s impact on nature.” His boss, Giles Merritt, spoke of problems being linked to democracy and free markets. Overall, the message seems to be that if taxes don’t work, then guilt might. But if that isn’t sufficient, then - as others have said before - perhaps governments should ignore the wishes of electorates.

One mechanism for achieving such political ends has just emerged from a meeting in Busan, South Korea, again under the auspices of UNEP (busy people who must have large carbon footprints). This meeting agreed to set up the Intergovernmental Science-Policy Platform on Biodiversity and Ecosystem Services (IPBES), to be modelled on the highly influential IPCC (Intergovernmental Panel on Climate Change). The aim is to provide authoritative reports on which governments can base policies to protect biodiversity.

Very few people around the world will have been aware that this body was being formed. But it, the IPCC and probably further ones in future will increasingly influence the environmental policies of our governments. And if that doesn’t work, their pronouncements will be used to make the whole world feel guilty.

By Roger Pielke Sr.

The following article appeared in Nature on May 13, 2010:

Peter A. Stott and Peter W. Thorne, 2010: How best to log local temperatures? Nature, doi:10.1038/465158a, page 158 [thanks to Joe D’Aleo for alerting us to this]. It perpetuates the myth that the surface temperature data sets are independent of each other.

The authors know better but have decided to mislead the Editors and readers of Nature.

They write “In the late twentieth century scientists were faced with a very basic question: is global climate changing? They stepped up to that challenge by establishing three independent data sets of monthly global average temperatures. Those data sets, despite using different source data and methods of analysis, all agree that the world has warmed by about 0.75 C since the start of the twentieth century (specifically, the three estimates are 0.80, 0.74 and 0.78 C from 1901-2009).”

This is deliberately erroneous, as one of the authors of this article (Peter Thorne) is an author of a CCSP report that reaches a different conclusion. With only limited exceptions, the surface temperature data sets do not use different sources of data and are, therefore, not independent.

As I wrote in my post “An Erroneous Statement Made By Phil Jones To The Media On The Independence Of The Global Surface Temperature Trend Analyses Of CRU, GISS And NCDC”:

In the report “Temperature Trends in the Lower Atmosphere: Steps for Understanding and Reconciling Differences. Final Report, Synthesis and Assessment Product 1.1” [a report of which Peter Thorne is one of the authors], on page 32 it is written [text from the CCSP report is in quotation marks]:

“The global surface air temperature data sets used in this report are to a large extent based on data readily exchanged internationally, e.g., through CLIMAT reports and the WMO publication Monthly Climatic Data for the World. Commercial and other considerations prevent a fuller exchange, though the United States may be better represented than many other areas. In this report, we present three global surface climate records, created from available data by NASA Goddard Institute for Space Studies [GISS], NOAA National Climatic Data Center [NCDC], and the cooperative project of the U.K. Hadley Centre and the Climate Research Unit [CRU] of the University of East Anglia (HadCRUT2v).”

These three analyses are led by Tom Karl (NCDC), Jim Hansen (GISS) and Phil Jones (CRU).

The differences that occur between the three global surface temperature analyses are a result of the analysis methodology used by each of the three groups. They are not “completely independent”. This is further explained on page 48 of the CCSP report, where it is written with respect to the surface temperature data (as well as the other temperature data sets) that “The data sets are distinguished from one another by differences in the details of their construction.”

On page 50 it is written, “Currently, there are three main groups creating global analyses of surface temperature (see Table 3.1), differing in the choice of available data that are utilized as well as the manner in which these data are synthesized.” and “Since the three chosen data sets utilize many of the same raw observations, there is a degree of interdependence.”

The chapter then states on page 51 that “While there are fundamental differences in the methodology used to create the surface data sets, the differing techniques with the same data produce almost the same results (Vose et al., 2005a). The small differences in deductions about climate change derived from the surface data sets are likely to be due mostly to differences in construction methodology and global averaging procedures.”

And thus, not surprisingly, it is concluded that “Examination of the three global surface temperature anomaly time series (TS) from 1958 to the present shown in Figure 3.1 reveals that the three time series have a very high level of agreement.”

Moreover, as we reported in our paper: Pielke Sr., R.A., C. Davey, D. Niyogi, S. Fall, J. Steinweg-Woods, K. Hubbard, X. Lin, M. Cai, Y.-K. Lim, H. Li, J. Nielsen-Gammon, K. Gallo, R. Hale, R. Mahmood, S. Foster, R.T. McNider, and P. Blanken, 2007: Unresolved issues with the assessment of multi-decadal global land surface temperature trends. J. Geophys. Res., 112, D24S08, doi:10.1029/2006JD008229.

“The raw surface temperature data from which all of the different global surface temperature trend analyses are derived are essentially the same. The best estimate that has been reported is that 90–95% of the raw data in each of the analyses is the same (P. Jones, personal communication, 2003).”

Peter Stott and Peter Thorne have deliberately misled the readership of Nature in order to give the impression that three independent data analyses corroborate each other’s trends, while in reality the three surface temperature data sets are closely related.
