Hurricane Trends and Our Climate

Major storms are nature’s way of reducing temperature imbalances and restoring equilibrium. Major winter storms move cold air south and warm air north. Hurricanes transport excess heat from the tropics to higher latitudes. There are more major winter storms when extreme cold comes south, and more hurricanes in warm years and eras when more heat builds in the tropics.

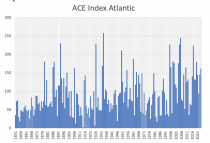

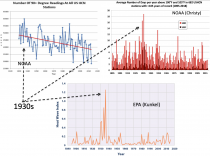

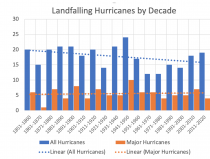

Hurricanes are giant heat engines - the average storm generates heat energy equivalent to all the electric energy produced in the US in an entire year. (Chris Landsea NOAA HRL). See how the hurricane threat diminished during the cold period from the 1960s to 1990s.

Hurricanes form over warm ocean water (primarily over 80F) during the summer into the fall. The activity varies year-to-year and over longer periods as natural equatorial ocean cycles like ENSO (El Nino or La Nina) and multi-decadal cycles in the northern Pacific (Pacific Decadal Oscillation or PDO) and Atlantic (Atlantic Multidecadal Oscillation or AMO) enhance or limit the ocean warming and affect the potential storm activity levels in one or more basins (West Pacific, East/Central Pacific or Atlantic).

There are more major hurricanes when the heat level in the ocean and the atmosphere in the tropics and subtropics is high. Cold periods are less stormy outside the tropics. See the drop in the 1960s to 1990s colder period.

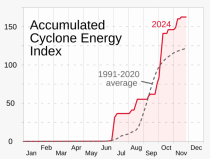

Dr William Gray was a pioneer in hurricane forecasting. Gray and his associates at Colorado State University developed a very useful measure of global hurricane and major hurricane activity, the Accumulated Cyclone Energy (ACE Index). The ACE index takes into account the number, duration, and strength of all tropical storms and hurricanes in each ocean basin during the year.
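
As a rough illustration of how one number can combine storm count, duration, and strength, ACE is conventionally computed by summing the squares of each storm's maximum sustained wind (in knots) at six-hour intervals while the system is at tropical storm strength or stronger, then scaling by 10^-4. The sketch below assumes that convention. The function names, the 34-knot cutoff, and the sample wind values are illustrative assumptions, not observations.

```python
from typing import Dict, Iterable, List

# Assumed cutoff for tropical-storm strength, in knots.
TS_THRESHOLD_KT = 34.0


def storm_ace(six_hourly_winds_kt: Iterable[float]) -> float:
    """ACE contribution of one storm from its 6-hourly maximum sustained winds."""
    return 1e-4 * sum(v * v for v in six_hourly_winds_kt if v >= TS_THRESHOLD_KT)


def season_ace(storms: Dict[str, List[float]]) -> float:
    """Seasonal ACE: the sum of every storm's contribution in a basin."""
    return sum(storm_ace(winds) for winds in storms.values())


# Hypothetical wind histories (knots), one value every six hours.
sample_season = {
    "Storm A": [30, 35, 50, 65, 80, 60, 30],  # values below the cutoff are ignored
    "Storm B": [40, 45, 40],
}

print(round(season_ace(sample_season), 4))  # 2.3175
```

Because wind speed is squared, a long-lived major hurricane adds far more to the seasonal total than several short-lived tropical storms.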

The ACE Index 1851-2024 for the Atlantic Basin shows major year to year variance with active and quieter years and periods we can tie to natural variations in the ocean and atmosphere.

The trends are flat to down despite the active periods. See more here.

See a detailed analysis here.

See this paper on trends published with the Heritage Foundation.

------------------------

Tornado Trends and Our Climate

Tornadoes occur on every continent except Antarctica, but the most activity is in North America because the conditions that trigger tornadoes are most common here. The western mountains and the High Plains are often snow covered from winter into spring, which keeps air masses cold there. Meanwhile, as the sun returns north, temperatures warm in the south and moisture from the warm Gulf of Mexico is readily available. As storms move inland from the cool eastern Pacific and come down from the mountains, the jet stream tightens and often buckles, drawing moisture north and spinning up tornadoes. Periods with strong contrasts in air masses and temperatures are associated with an increased threat of tornadoes.

In this backgrounder we will evaluate whether tornadoes are more or less frequent during periods of increased warmth. We will look at both the data provided by the Storm Prediction Center from 1950 to the present and the data since 1973, when tornadoes were first evaluated based on the Fujita tornado intensity scale in their immediate aftermath, making their ratings more accurate. Tornado detection improved with the addition of Doppler radar (NEXRAD), the growth of the trained spotter networks, local and national media, storm chasers and the public armed with cell phone cameras and access to social media. In this period, population growth and expansion outside urban areas have exposed more people and buildings to the tornadoes that once roared through open fields.

KEY TAKEAWAYS

* The claim that climate change is causing more and stronger tornadoes is invalidated by the relevant trend data.

* The 10 year running mean has dropped 66% since the peak in the cold mid 1960s.

* The U.S. has not seen an EF5 tornado in 11 years, the longest such streak since at least 1950.

When tornadoes do occur, expansion of populated areas puts more property and lives at risk. Despite that fact, both inflation-adjusted and normalized tornado damage have decreased in the U.S. since 1950. Data clearly show tornadoes are more frequent and stronger in colder years and periods (like the 1950s to 1970s).
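
A 10 year running mean and the percentage drop from its peak, as quoted in the takeaways, can be computed from any annual count series. The following is a minimal sketch, assuming a trailing 10-year window. The helper names are hypothetical and the counts are made-up placeholders, not SPC data.

```python
from typing import List, Sequence


def running_mean(values: Sequence[float], window: int = 10) -> List[float]:
    """Trailing running mean: one value for each full window of years."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def percent_drop_from_peak(series: Sequence[float]) -> float:
    """Percent decline of the latest value relative to the series maximum."""
    peak = max(series)
    return 100.0 * (peak - series[-1]) / peak


# Placeholder annual counts: ten "active" years followed by ten "quiet" years.
annual_counts = [50] * 10 + [30] * 10

means = running_mean(annual_counts)
print(means[0], means[-1])            # 50.0 30.0
print(percent_drop_from_peak(means))  # 40.0
```

With real data, the same two helpers would be applied to the annual EF3+ counts. The result depends on the window length and on whether the window is trailing or centered, so those choices should be stated alongside any quoted percentage.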

--------------------

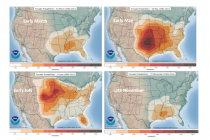

SEASONAL VARIANCE

The threat of tornadoes cycles across different regions of the U.S.: the Gulf Coast from November to April, the Plains from May to early June, and the northern Plains and upper Midwest in June and July, before most action shifts back to the Southeast. Although there are clearly times of the year when tornadoes are most prominent, they can occur any time given the right weather conditions.

March, May, July and November Tornado Climatology

See https://youtu.be/z-oijdylBcQ for animation.

Tornadoes are failing to follow “global warming” based predictions.

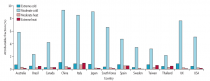

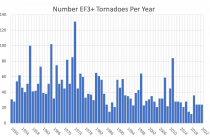

The NOAA Storm Prediction Center (1) provided a history of tornadoes through 2021 showing that, after an active period for EF3 level intensity tornadoes from the 1950s to the 1970s, the trend in these strong storms has been down. There are spikes in some years, most notably 2011.

SPC US Annual Count of EF3+ Tornadoes 1950-2022

Note that the dozen-year-long lull followed the very active and deadly strong La Nina of 2010/11, which, like the strong La Nina of 1973/74, produced record-setting and very deadly outbreaks of tornadoes.

After the 2011 spike, the years 2012, 2013, 2014, 2015, 2016 all saw below average to near record low tornado counts in the U.S. since records began in 1954. 2017 rebounded only to the long-term mean while 2018 activity returned to well below the 25th percentile. 2019 bounced to the 75th percentile with a major outbreak centered on Easter Sunday. The following three years saw it drop well below average. In 2021 a long track tornado and major deadly December outbreak occurred but the annual count remained below the 25th percentile. 2022 started strong in March and early April but activity declined as dry and warmer conditions developed in the central states. It too ended well below the 25th percentile with only 24 reported deaths for the nation.

Data Reliability - Improved Detection

Coleman and Dixon (2014) (2) and others found that prior to 1973, tornadoes were likely overrated due to the use of news clippings, sensational journalism, etc. Starting in 1973, tornadoes were evaluated based on the Fujita tornado intensity scale in their immediate aftermath, making their ratings more accurate. They also found that after NEXRAD radars went online nationwide in the early 1990s, the detection of all tornadoes, including weak ones, became more likely. “This radar system alerts the NWS to areas with possible tornadoes (including weak tornadoes), even in unpopulated areas, that the NWS would have never found before the radar system. Also, since the early 1990s, with the Verification of the Origins of Rotation in Tornadoes Experiment (VORTEX; Rasmussen et al.) (3) and the movie Twister, tornado chasing has become almost ubiquitous during days with tornado risk, especially in the Great Plains. This has also reduced the likelihood of unreported tornadoes.”

See much more.

See this paper on tornado trends published with the Heritage Foundation here.

-----------------------

Claim: Global Warming has increased U.S. Wildfires

REBUTTAL

In the U.S., wildfires are in the news every late summer and fall. The National Interagency Fire Center has recorded the number of fires and acreage affected since 1985. These data show that the trend in the number of fires is actually down while the trend in the acreage burned has increased.

In the past, lightning and campfires caused most forest fires; today most are the result of power lines igniting trees. The power lines have increased proportionately with the population, so it can be reasoned that most of the damage from large wildfires in California is partially a result of increased population not Global Warming. The increased danger is also greatly aggravated by poor government forest management choices.

“In the United States, wildfires are also due in part to a failure to thin forests or remove dead and diseased trees”. In 2014, forestry professor David B. South of Auburn University testified to the U.S. Senate Environment and Public Works Committee that “data suggest that extremely large megafires were four-times more common before 1940,” adding that “we cannot reasonably say that anthropogenic global warming causes extremely large wildfires.” As he explained, “To attribute this human-caused increase in fire risk to carbon dioxide emissions is simply unscientific.”

The NWS tracks the number of days where weather (not forest) conditions are conducive to wildfires such that they issue red-flag warnings. The number of red-flag days has not trended upward due to “Global Warming.”

90% of the fires are caused by humans though natural seasonal weather variations create conditions that are conducive to fires and the rapid spread of these fires west to increasingly populated areas. Human-caused fires result from campfires left unattended, the burning of debris, downed power lines, negligently discarded cigarettes, and intentional acts of arson.

Bjorn Lomborg overlaid National Interagency Fire Center (NIFC) annual US fire data on the Historical Statistics of the United States - Colonial Times to 1970, which has statistics for area burnt from 1926 to 1970. Reassuringly, the data for 1960-1970 ‘completely overlap’; this is the same data series, Professor Lomborg said. It shows recent forest fire activity is one-fifth of the record since 1926, even with the recent increases in acreage burnt.

We can see that wildfires were more common prior to 1880. Swetnam looked at the long-term incidence of wildfires in North America and found they have declined over the last century.

See more here.

Note: See the Timeline of Temperature measurements over the years here. See why for many reasons, it can’t be validated.

--------------------

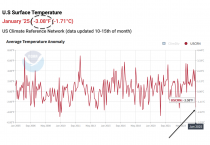

The cold January of 2025

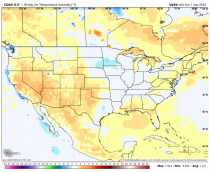

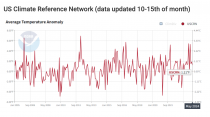

January Anomalies for the USCRN (US Climate Reference Network) - US averaged 3.08F below normal, among the top 10 coldest in the records.

The U.S. Climate Reference Network (USCRN) is a systematic and sustained network of climate monitoring stations with sites across the contiguous U.S., Alaska, and Hawaii. These stations use high-quality instruments to measure temperature, precipitation, soil conditions, and more. USCRN provides a continuous series of climate observations to monitor national climate trends and support climate-impact research.

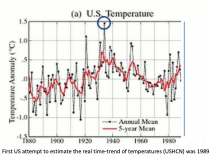

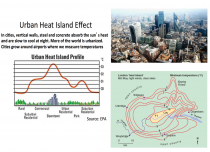

The network is much less subject to local Urban Heat Island elevating night-time readings. Ironically, the first Climate Data Center knew this and attempted to adjust for UHI.

NOAA National Climate Data C...6F/century)… The rate may have increased after the 1950s, commensurate with the large growth in and around airports (up from 20% to over 70%)” Karl 1988.

“Our results and those of others show that this ‘growth’… is serious and must be taken into account ...assessing temperature...”

The first ever monthly US plot with the adjustment looked like this.

The NYT noticed a lack of warming in the data (1989 was just after Hansen’s 1988 congressional ‘show’ with AC off and windows open in the 1988 heat wave).

We in the forecasting industry (I was at TWC still in 1989. JB at ACCU) knew about UHI.

Listen to most any forecast on TV and radio and you will see or hear something like this.

“Low tonight near 40 in town but some upper 20s in the rural cold spots. High tomorrow in the mid 50s”.

That is the UHI story

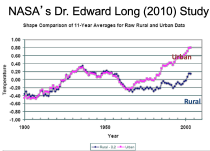

Some at NASA illustrated the issue with ignoring UHI.

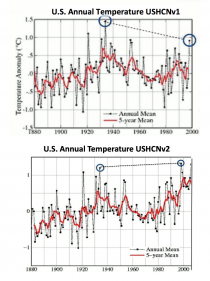

Inexplicably, the UHI adjustment Karl argued for was removed in USHCNv2 over a decade later.

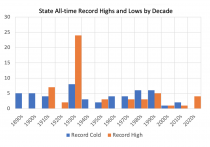

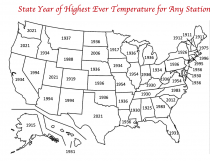

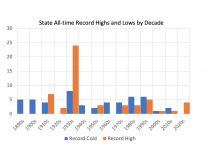

The state all-time records clearly show the 1930s as the hottest decade from the records (not adjusted for UHI)

The UHI warming is mostly at night. The range for all stations from warmest ever to coldest ever is well above 100F.

(1) History shows that mortality is 20 times greater for cold than warm for 13 major countries in all climate zones.

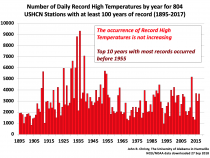

(2) Heat records are not increasing. Heat was a far worse problem a century ago.

(3) Multi-decadal cycles of warming and cooling can be explained by solar and ocean cycles and/or volcanism. Much of the apparent warming shown in global surface data constructions relate to urban heat island effect, which elevates nighttime lows. Data sites designed to avoid contamination show no warming since their inception more than 16 years ago.

(4) Cold temperatures have a far greater negative economic impact than warmer conditions. See more here.

(5) The overestimation of greenhouse warming and heat related mortality can be attributed to poor modeling

See more details in this report.

------------------------

Please SUPPORT ICECAP if you can with the PayPal link on the left. We are working to update our case that climate changes can be explained by natural cycles and that these same factors can be used to predict ahead with more skill than the models. I have been doing that since first inspired by my heroes like Jerome Namias. In my lifetime, I have witnessed and spent a career explaining what was behind the weather regimes. In the 1950s, we were worried about heat and hurricanes; in the 1970s even Mr. Spock was doing shows on the coming ice age. Since the 1990s we have been told heat would get us and we would be underwater in NYC by the 2020s.

I am sure you have been shocked by the wacky weather the last few years, including the historic snowstorm on the Gulf coast. I have spent my career trying to understand what factors explain the cycles to get a leg up on forecasting what is ahead. Icecap has been a one-man show with excellent contributed posts from former students and friends I respect for their good work and courage. We have reached 312 million page views. Our library is searchable, with well over 10,000 entries. We have lost many of my career associates in the last 10 years. Many of these giants in our field can be found in our library. As I have moved away from some of my daily efforts, I hope to find partners to take the work we have done and build the future ICECAP. Contact me at jdaleo6331@aol if you have ideas or questions.

See story on big changes coming mid-month at Weatherbell or Linkedin

WEATHER 2024 IN REVIEW

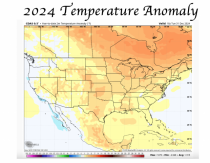

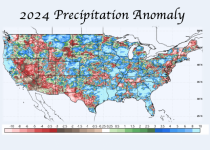

2024 was a warm year from eastern and central Canada into the central and northeast US.

Summer was near normal central into the east, warmer southwest.

It was dry in the southwest and in spots to the east. Some eastern areas went weeks without rainfall in the mid to late summer, after a wetter 2023 and start to 2024.

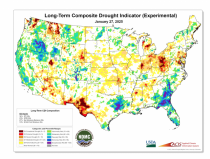

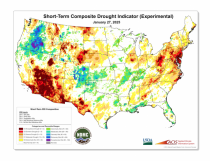

Drought persisted in the eastern Ohio Valley to Mid-Atlantic and spots to the southwest. It was very wet western and eastern Gulf and southeast thanks to hurricanes.

The drought worsened and sparked large fires as the Santa Ana winds came on strong.

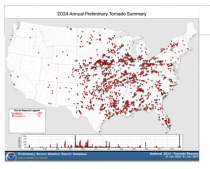

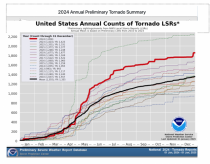

Tornado activity, which in recent years has focused on the southeast more than the traditional Tornado Alley (the recent trend, as Tim Coleman has shown), shifted northwest into the central states in 2024.

The tornado count was 2nd highest of recent years.

Hail was a problem in many areas. It did damage at my home in NH, but I was not alone by any means.

JB warned in December 2023 that 2024 would be big trouble for the Gulf. It started in June but eased off until late September and October. In the end it was an impactful season with an above normal ACE. The Atlantic Basin was the most active region in 2024.

Overall, the 2024 Atlantic hurricane season had above-normal activity in terms of the number of named storms, hurricanes, and major hurricanes. In 2024, there were 18 named storms that formed in the Atlantic basin, of which 11 became hurricanes and 5 strengthened into major hurricanes (category 3 or higher on the Saffir-Simpson Hurricane Wind Scale). These numbers are greater than the long-term (1991-2020) averages of 14 named storms, 7 hurricanes, and 3 major hurricanes. In terms of Accumulated Cyclone Energy (ACE), which measures the strength and duration of tropical storms and hurricanes, activity in the basin in 2024 was about 34 percent above the long-term (1991-2020) average.

Eighty-five named storms occurred across the globe in 2024, which was slightly below the 1991-2020 average of 88. Forty-two of those reached tropical cyclone strength (≥74 mph), and 23 reached major tropical cyclone strength (≥111 mph). These also included four storms that reached Category 5 (≥157 mph) on the Saffir-Simpson hurricane wind scale. The global accumulated cyclone energy (ACE) was about 21% below the 1991-2020 average.

As noted, the North Atlantic had 18 named storms, which was above the 1991-2020 average of 14. Eleven of those were hurricanes, including five major hurricanes. The ACE was about 32% above normal. Most of the damage in the U.S. was attributable to two major hurricanes: Helene in September and Milton in October.
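
The "above normal" and "below normal" comparisons in the season summaries above are simple percent departures from the 1991-2020 averages. A small sketch using only the counts quoted in the text; the function name is a hypothetical helper.

```python
def percent_vs_average(observed: float, average: float) -> float:
    """Percent departure of an observed count from its long-term average."""
    return 100.0 * (observed - average) / average


# (2024 count, 1991-2020 average) for the Atlantic basin, as quoted above.
atlantic_2024 = {
    "named storms": (18, 14),
    "hurricanes": (11, 7),
    "major hurricanes": (5, 3),
}

for label, (observed, average) in atlantic_2024.items():
    print(f"Atlantic {label}: {percent_vs_average(observed, average):+.0f}%")

# Global named storms: 85 in 2024 against an average of 88.
print(f"Global named storms: {percent_vs_average(85, 88):+.1f}%")
```

The same calculation applies to ACE when the seasonal total and its long-term mean are available. Small differences between published ACE percentages usually come from slightly different seasonal totals or base-period means.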

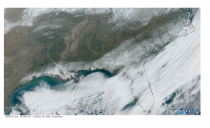

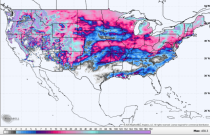

As we moved into winter and 2025, an amazing cold shot and historic snowstorm in the south made the news.

A snowy street in Pensacola, Florida Jan. 22, 2025

Bloomberg noted: The region’s worst snowstorm in 130 years has seen as much as 10 inches pile up in New Orleans, according to public reports, smashing a record of 2.7 inches set in 1963. Temperatures fell to 26F (minus 3C) overnight. Similar accumulations of snow were recorded in Pensacola, Florida, and more than four inches hit the Houston area.

MSN news reported “In Louisiana, deep mounds of snow met the warm waters of the Gulf of Mexico. In New Orleans, locals skied down Bourbon Street as the airport recorded 8 inches, smashing the daily record of 2.7 inches.

By the end of Tuesday, New Orleans had observed more snow in this single event than New York City and Anchorage did in all of December and January - and according to the data, the area around the city may have been Tuesday’s snowiest place, with an estimated total of just over a foot.

In Alabama, there was a snowball fight on the beach. The Gulf Coast had its first blizzard warning on record.

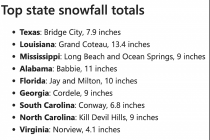

As an AP story in the Winnipeg Free Press observed: “Milton is just northeast of Pensacola, where 8.9 inches (22.6 centimeters) shattered the city’s previous all-time snow record of 3 inches (7.6 centimeters) set in 1895.” The table below shows how much snow fell from the storm in the south.

The snow resulted in record-breaking cold temperatures across the Deep South. It was warmer in Anchorage, Alaska than in Atlanta, New Orleans, Charlotte, NC and Jacksonville, FL. In Alabama, the low of 6F tied for the third lowest temperature for Mobile, AL, set in 1899, according to the NWS. In Lafayette, Louisiana, the low of 4 degrees broke a record dating back to 1899.

2024/25 Snow Season to Date

See full story here

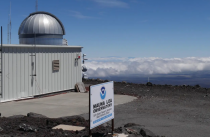

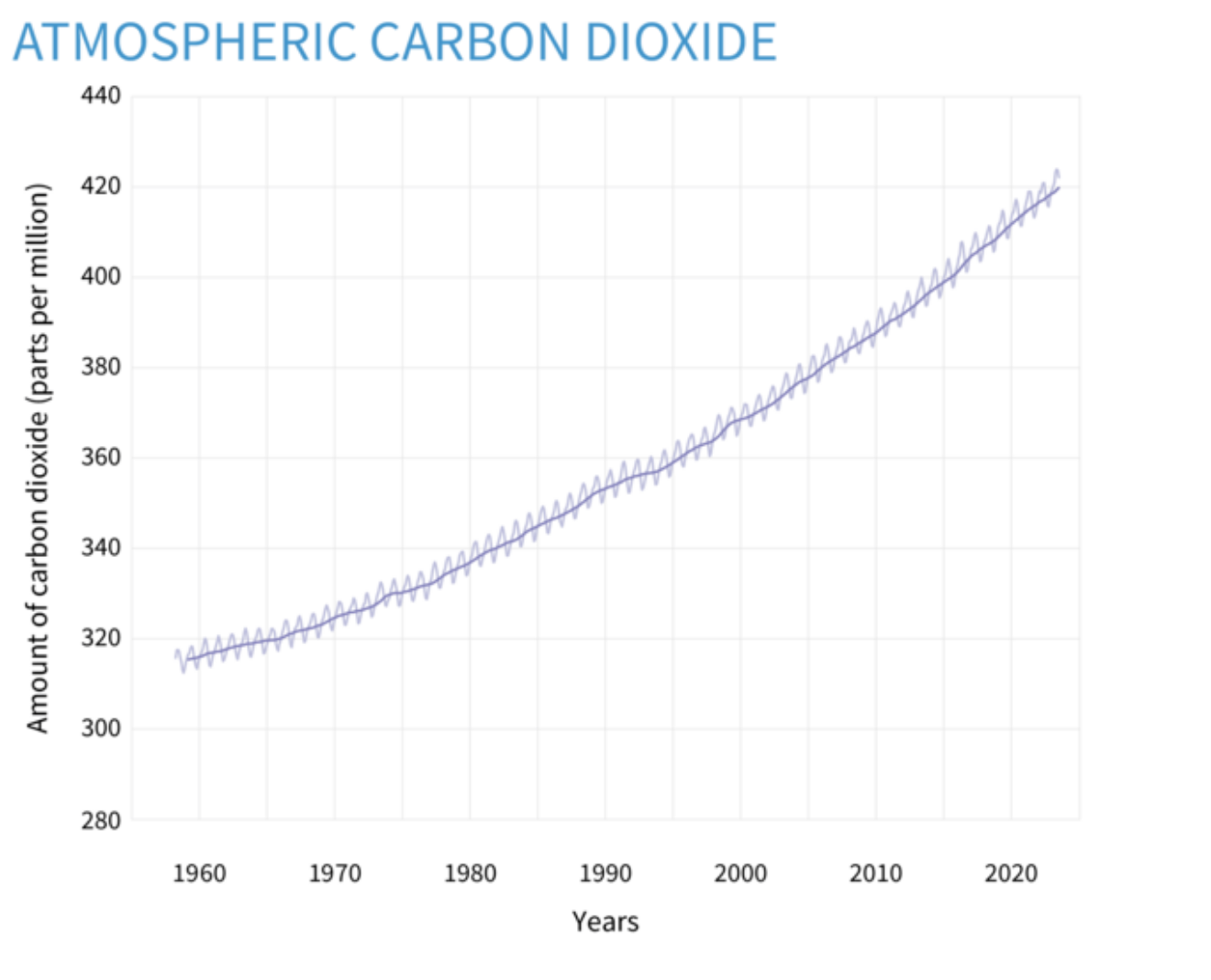

The official site for annual CO2 is Mauna Loa. The station, at 11,300 feet (3,444 meters), has a 131-foot (40-meter) tower that collects air to measure levels of carbon dioxide.

In 1958, Charles Keeling chose to install an atmospheric carbon dioxide monitoring system on Mauna Loa, a volcanic peak on the Big Island of Hawaii, as the remote location would allow only carbon dioxide that had mixed with the atmosphere to be measured.

The latest annual numbers are around 420 ppm, which is about 0.04% of the air. Levels are lowest in early fall, at the end of the Northern Hemisphere growing season, after vegetation has been using it in photosynthesis and releasing O2.

It varies greatly where we actually live. When we breathe in, the air has just 0.04% (420 ppm) CO2; when we breathe out, we release 42,000 ppm. CO2 levels are much higher in populated areas, especially where people congregate (churches, schools, restaurants, even your home when the family and pets are there).
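
The ppm and percent figures used here are the same quantity in different units: 1% equals 10,000 parts per million. A minimal conversion sketch, with hypothetical helper names, applied to the two values quoted above:

```python
PPM_PER_PERCENT = 10_000  # 1% = 10,000 parts per million


def ppm_to_percent(ppm: float) -> float:
    """Convert a concentration in parts per million to percent by volume."""
    return ppm / PPM_PER_PERCENT


def percent_to_ppm(percent: float) -> float:
    """Convert a concentration in percent by volume to parts per million."""
    return percent * PPM_PER_PERCENT


for label, ppm in [("Mauna Loa annual value", 420), ("exhaled breath", 42_000)]:
    print(f"{label}: {ppm} ppm = {ppm_to_percent(ppm):.3f}%")
# Mauna Loa annual value: 420 ppm = 0.042%
# exhaled breath: 42000 ppm = 4.200%
```

On these figures, exhaled air carries 100 times the CO2 concentration of the air breathed in.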

IT’S NOT POLLUTION

HOME AND OFFICE AND SCHOOLS

Levels exceeding 2000 ppm are found in small offices. Ken C., a science teacher, writes, “One classroom of 30 students after lunch reached CO2 levels of 4,825 ppm with the door closed.” According to ASHRAE, the effects of poor indoor air quality in classrooms have been known for years. Chronic illnesses, reduced cognitive abilities, sleepiness, and increased absenteeism have all been attributed to poor IAQ. There is no direct harm from CO2; the claim is that it reduces oxygen levels.

CARS, TRAINS AND PLANES AND SUBMARINES

Studies found carbon dioxide levels rise to over 3,000 ppm in 30 minutes in an enclosed automobile with a single passenger. In airplane cabins it may rise to 1700 ppm. The alarmists are more interested in the emission from the planes into the atmosphere and want those that fly (and drive or ride the rails) to be held accountable (see the proposed 50 tonnes CO2 per person as a lifetime limit here).

In submarines, levels range up to 11,300 ppm.

EDUCATING THE POPULACE

I have always considered myself an environmentalist and conservationist as well as a Meteorologist and Climatologist. I worked on my doctorate with an atmospheric chemistry grant.

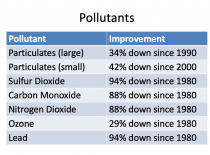

In the post WWII boom, we had problems with air pollution from factories, coal plants, cars, inefficient home heating systems and incinerators in apartments. We had serious air quality issues with pollutants: particulates, sulfur dioxide, carbon monoxide, nitrogen oxides, ozone and lead. The worst episodes, the ones that really drove efforts to fight pollution, came from atmospheric chemical reactions: cold season water droplets in fog mixed with SO2 to produce sulfuric acid mist. The smog event in Donora, PA in 1948 left 6,000 of the town’s 14,000 residents with damaged lungs, and the four-day Great London Smog of 1952 produced between 10,000 and 12,000 deaths.

Events like that still occur in China.

We set standards that had to be met by industry and automakers. After my BS and MS work at Wisconsin in Meteorology, I received a grant to study Air Resources/Pollution at NYU while I worked 7 days a week producing the weather for WCBS TV and radio and the CBS TV Network on the Special series on Energy. Many of my colleagues took jobs dealing with air quality at the EPA and elsewhere. After the work we all did there and at many schools on pollution, we have the cleanest air in my lifetime, and here in the U.S. the cleanest in the world today.

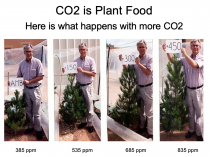

CO2 IS THE GAS OF LIFE

Notice CO2 was not on the list. CO2 is a trace gas (0.04% of our atmosphere). It is NOT a pollutant but a beneficial gas. CO2 is essential for photosynthesis. CO2-enriched plants are more vigorous, have lower water needs and are more drought resistant. Ideal CO2 levels for crops would be 3 to 4 times higher. They pump CO2 into greenhouses!

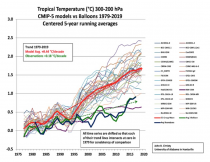

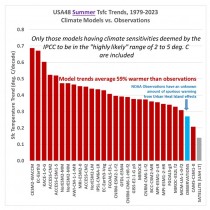

The climate models used to predict the impacts of increasing CO2 deliver warming over 2 times that observed by our NOAA orbiting satellite measurements of the air above the boundary layer where the greatest changes occur diurnally.

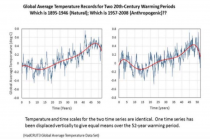

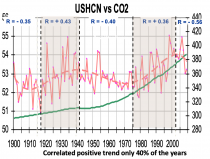

The apparent weak correlation to temperatures may be mostly the timing of the natural cycles. Longer term warming correlates with CO2 increases only 40% of the time.

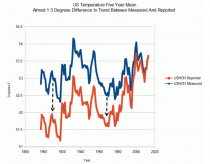

Sadly there have been changes that minimized the warm period from the 1920s to 1940s to try to make the case stronger.

CONCLUSION: CO2 IS NOT POLLUTION BUT A VALUABLE PART OF LIFE ON OUR PLANET

I have 2 CO2 monitors. I bought one using Amazon credits, and it arrived the next morning. One high quality model was donated to me to use by the CO2 Coalition. I found that with my daughter and 2 small dogs in the room, levels rise to over 800 ppm. At a football gathering of 8, it rose from 420 to near 1700 ppm. Had our team been doing better, we may have had a gathering with twice as many people and CO2 levels would have been well over 2000 ppm. I used and talked about our findings at a church organized meeting.

Many people confuse or conflate CO2 with the potentially deadly CO. A decade ago that included the chair of the NH Science and Energy committee when I was one of the testifiers. She said she was taught CO2 was a health hazard (confusing it with CO). If you search Amazon for CO2 monitors, they show you only choices for the harmful carbon monoxide labelled as CO2.

I found the real story can influence people with open minds. If CO2 is seen to be locally much higher where people congregate, I am a bit afraid the radical movement would take that fact on as another cause and try to enforce extreme measures (limiting driving, flying, congregating in large events) to pretend it will keep levels low. It would become another costly program with much more harm than benefit, as their assault on fossil fuel energy usage and the whole COVID episode have been the last 4 years.

-------------

From the CO2 Coalition

According to Patrick Moore, chairman and chief scientist of Ecosense Environmental and co-founder of Greenpeace, the climate change messaging isn’t based in fact.

“The whole thing is a total scam,” said Mr. Moore. “There is actually no scientific evidence that CO2 is responsible for climate change over the eons.”

Mr. Moore said that over the past few decades, the climate message has continually changed; first, it was global cooling, then global warming, then climate change, and now it’s disastrous weather.

This is from Epoch Times and pay walled. Here is a pdf of the article.

------------

Over the years I have given many talks on climate - see a recent 50 minute story on local cable.

Here is a much needed compilation from highest level scientists willing to tell the real story. New documentary, “Climate: The Movie” (2024), features Dr. Willie Soon from CERES.

A new documentary on climate change by Martin Durkin, “Climate: The Movie”, was posted online today (March 21st, 2024). The film presents a different perspective on climate change from the standard narratives promoted by the UN’s Intergovernmental Panel on Climate Change (IPCC).

Dr. Willie Soon, CERES co-team leader, was interviewed for this documentary, along with many other scientists and commentators. The 19th century philosopher, John Stuart Mill, noted in 1859 that “he who knows only his own side of the case knows little of that”. This documentary presents a different “side of the case” on climate change, and we think it is definitely worth watching and sharing with anybody who wants to hear different perspectives.

See and share this documentary below.

--------

Three projects I have worked on for the last 2 years have been completed. Together with my regular daily reports on Weatherbell with Joe Bastardi and other superstars, they limited my time on Icecap. I also struggled with the loss of my dear wife Emily, my lifelong companion. I will return to more regular posting and updating the important Alarmist Claim rebuttal work. I appreciate your donations to support the site. Since our inception, we have had an amazing 301 million views. Thank you!

Steve Goreham in the Washington Examiner,

The next big climate scare is on the way. Advocates of measures to control the climate now propose that we begin counting deaths from climate change. They appear to believe that if people see a daily announcement of climate deaths, they will be more inclined to accept climate change policies. But it’s not even clear that the current gentle rise in global temperatures is causing more people to die.

In December, former Secretary of State Hillary Clinton spoke at COP28, the 28th United Nations Climate Conference, and mentioned climate-related deaths.

“We are seeing and beginning to pay attention and to count and record the deaths that are related to climate,” she said. “And by far the biggest killer is extreme heat.”

---------

See the CO2 Coalition video using the actual facts to clearly show the fallacy of the alarmist media and pseudoscientist claims that Steve discusses here:

------------

According to Ms. Clinton, Europe recorded 61,000 deaths from extreme heat in 2023, and she estimated that about 500,000 people died from heat across the world last year.

Global temperatures have been gently rising for the last 300 years. Temperature metrics from NASA, NOAA, and the Climate Research Unit at the University of East Anglia in the United Kingdom estimate that Earth’s surface temperatures have risen a little more than one degree Celsius, or about two degrees Fahrenheit, over the last 140 years. But are these warmer temperatures harmful to people?

According to the Centers for Disease Control and Prevention, most cases of influenza occur during December to March, the cold months in the United States. Influenza season in the southern hemisphere takes place during the cold months there, April through September. The peak months for COVID-19 infections tended to be the cold periods of the year. More people usually get sick during cold months than in warm months.

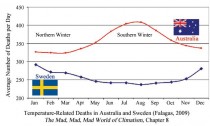

More people also die during winter months than summer months, according to many peer-reviewed studies. For example, Dr. Matthew Falagas of the Alfa Institute of Medical Sciences and five other researchers studied seasonal mortality in 11 nations. The research showed that the average number of deaths peaked in the coldest months of the year in all of them.

The late Dr. William Keatinge studied temperature-related deaths in six European countries for people aged 65 to 74. He concluded that deaths related to cold temperatures were nine times greater than those related to hot temperatures. Dr. Bjorn Lomborg, president of the Copenhagen Consensus Center, has pointed out that moderate global warming will likely reduce human mortality.

Yet, on January 30, Dr. Colin J. Carlson of Georgetown University published a paper in Nature Medicine titled, “After millions of preventable deaths, climate change must be treated like a health emergency.” Carlson claims that climate change has caused about 166,000 deaths per year since the year 2000, or almost four million cumulative deaths.

Carlson admits that most of these deaths have been due to malaria in sub-Saharan Africa, or malnutrition and diarrheal diseases in south Asia. But he goes on to claim that deaths due to natural disasters and even cardiovascular disease should also be attributed to climate change. If death from cardiovascular disease can be counted as a climate death, almost any death can be counted.

The evidence doesn’t support these climate death claims. Malarial disease has plagued humanity throughout history, even when temperatures were colder than today. Dr. Paul Reiter, medical entomologist at the Pasteur Institute in Paris, points out that malaria was endemic to England 400 years ago during the colder climate of the Little Ice Age. The Soviet Union experienced an estimated 13 million cases of malaria during the 1920s, with 30,000 cases occurring in Archangel, a city located close to the frozen Arctic Circle.

Malnutrition has been declining during the gentle warming of the last century. During the early 1900s, as many as 10 million people would die from famine each decade globally. Today, world famine deaths have been reduced to under 500,000 people per decade. About 10% of the world’s people are malnourished today, but this is down from about 25% in 1970.

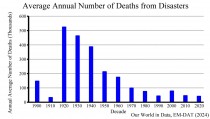

The number of deaths from natural disasters has also been falling during the warming over the last century. According to EM-DAT, the International Disaster Database, the deaths from disasters, including storms, famines, earthquakes, droughts, and floods, are down more than 90 percent over the last 100 years.

With deaths from natural disasters and famine declining, and since fewer people die in warmer temperatures, the case for counting deaths from global warming is poor at best. But don’t underestimate the ability of climate alarmists to create fear by exaggerating the data.

Steve Goreham is a speaker on energy, the environment, and public policy and the author of the new bestselling book Green Breakdown: The Coming Renewable Energy Failure.

------------------

Icecap Note: Any warming not related to ocean cycles, solar cycles or volcanism is driven by urbanization. NCEI has a data set that is protected from urban warming contamination by better instrumentation and especially better siting.

In the very first US operational data set in the late 1980s, adjustments were built in to correct for urbanization at national network stations in growing cities and at the 70% of network stations that were at airports. In the following versions the original adjustment algorithms were removed, producing a data trace that better matched the AGW claims of man-made CO2 warming. Fortunately NOAA, with a push from Dr. John Christy, set up a network of carefully sited stations like the one he used in Alabama, where he was the state climatologist. NOAA never discusses this data gem but makes the data available monthly if you can find it on their site. Even with the global warming that may have been driven in part over the last few years by the Tonga eruption (see also WUWT), the warming trend is minimal.

May 2024 | 1.21F (0.67C)

US Climate Reference Network (data updated 10-15th of month)

The US Climate Reference Network record from 2005 shows no obvious warming during this period. The graph above is created monthly by NOAA.

The graph shows the Average Surface Temperature Anomaly for the contiguous United States since 2005. The data comes from the U.S. Climate Reference Network (USCRN) which is a properly sited (away from human influences and infrastructure) and state-of-the-art weather network consisting of 114 stations in the USA.

These station locations were chosen to avoid warm biases from Urban Heat Island (UHI) effects as well as microsite effects, as documented in the 2022 report Corrupted Climate Stations: The Official U.S. Surface Temperature Record Remains Fatally Flawed. Unfortunately, NOAA never reports this data in its monthly or yearly “state of the climate report.” And mainstream media is either entirely unaware of the existence of this data set or has chosen not to report on this U.S. temperature record.

The national USCRN data, updated monthly as shown in the above graph, can be viewed here; click on ClimDiv to remove that data display from the graph.

---------

See a history of weather data changes and manipulations over time here.

They ignore the actual heat records. The 1930s were clearly the hottest years for all-time state record highs. There have been more record lows than all-time highs since then.

Largest Protest in Human History is Under Way in Germany Against the Global Warming Cult

One of the most well-coordinated and massive protests in human history is happening in Europe right now. Blue collar and white collar workers are protesting the EU’s global warming insanity in an ingenious manner that has basically crippled the German economy.

If you haven’t heard anything about this here in America yet, that is because most of our media is on the side of globalist tyranny and against the people. The protests were initially described as another “farmer protest” in Germany, but they have grown into something much larger than that now.

Farms have been under a full-on assault by the global warming cultists and the WEF for a while now. Joe Biden’s “climate envoy” John Kerry has been running around claiming that family farms are going to have to be confiscated from people if we are going to save the planet from the weather. He actually says that. Out loud.

In the EU, that insanity is becoming a reality. The Irish government, for example, passed a law demanding that 1.3 million cows be slaughtered, so that their farts won’t hurt the weather. If the disruption to the global food supply causes lots of people to starve to death, well, that’s a feature of saving the planet from cow farts. You’ve got to break a few eggs in order to make a communist omelet and so forth.

The government has already killed about 10% of the livestock in the Netherlands. That’s a big part of the reason why the “farmer protests” happened in that country. World governments are basically telling “the little guy” that if they lose their livelihood or if they die, that’s a small price to pay for the greater good - for the earth.

Which brings us to the German protests this week.

The German government is trying to impose new restrictions on farms that will basically put small farms out of business. This is exactly the type of policy that John Kerry, who has never been on a farm in his life, is talking about.

Here’s how the amazing German protests have been unfolding.

On day one, the farmers started the protest. They drove their tractors into the cities and started blocking transport hubs, main arteries, and access to government buildings. It’s a nuisance, but the globalists figure they can outlast the farmers while vilifying them and calling them “threats to democracy.”

On day two...a huge number of Polish truck drivers crossed the border into Germany and joined the protest. They blocked even more main highways and shut down the border crossings in and out of Germany. If you’re a globalist in the German government, at this point you’ve got to be thinking, “Uh oh!”

On day three...all the rail workers in Germany went on strike in solidarity with the farmers. Now there are no trains running. The subways are not taking workers to the office. Those trains and trucks loaded with groceries that restock the store shelves in the cities are not coming. And Berlin has about three days’ worth of food on the grocery shelves. Who do you think is going to blink first in this situation?

If the trains aren’t running, then transport companies are forced to use trucks. But the truckers are standing in solidarity with the farmers. The farmers are blocking off the highways and main roads.

How long do you suppose people in Berlin will be willing to go hungry before they step out in solidarity against their fascist, globalist government?

The globalists like to pretend that “the little guy” doesn’t matter. They’re about to learn a painful lesson in Germany. The most important person in your society is not some government bureaucrat with a master’s degree in city administration who works in the Redundant Department of Official Redundancy.

The most important people in society have always been the guys who grow and provide the milk, eggs, cheese, steak, chicken, bread, salads, and bacon (especially bacon) that we all need to survive.

Globalism and neoliberalism have been abject failures. This is why nationalism is the way forward out of the mess that these people have made of the world. It’s why the people are revolting against their governments, by electing nationalist and populist leaders like Javier Milei and Donald Trump.

Tucker Carlson brings us a snapshot of these amazing, multi-dimensional protests in Germany in this short clip:

See also Public Charging Stations Turn into Electric ‘Car Graveyards’ in Bitter Chicago Cold here

See this by Robert Bryce on “Tesla In Turmoil: The EV Meltdown In 10 Charts” and the Manhattan Contrarian post here on the demise of the US green energy transition.

Weather Rant by Professor Art Horn, Meteorologist AMS

“What is necessary for the very existence of science and what the characteristics of nature are, are not to be determined by pompous preconditions, they are determined always by the material with which we work, by nature herself.” - Dr. Richard Feynman

Published at theartofweather.net

Tuesday September 5th, 2023

Love that quote by the good doctor above. It’s especially relevant to the story below! Be it scientific journals or the sea of both established and fledgling media companies, they all have one goal, it would seem, and it’s not the truth but perhaps just the part of the truth that fits their predetermined narrative. Is a lie of omission really a lie, or is it a willful desire to deceive (a lie) by appearing to tell the truth?

The story below is also instructive in that it should make one aware of the fact that the big government that is now in power is throwing BILLIONS of dollars at climate scientists and research facilities via the EPA, The National Science Foundation and a myriad of other federal agencies to propel their narrative that climate change is a serious threat to our future.

Top scientist Patrick Brown says he deliberately OMITTED key fact in climate change piece he’s just had published in prestigious journal to ensure woke editors ran it - that 80% of wildfires are started by humans

Story by Lewis Pennock For Dailymail.Com

A climate change scientist has claimed the world’s leading academic journals reject papers which don’t ‘support certain narratives’ about the issue and instead favor ‘distorted’ research which hypes up dangers rather than solutions.

Patrick T. Brown, a lecturer at Johns Hopkins University and doctor of earth and climate sciences, said editors at Nature and Science - two of the most prestigious scientific journals - select ‘climate papers that support certain preapproved narratives’.

In an article for The Free Press, Brown likened the approach to the way ‘the press focus so intently on climate change as the root cause’ of wildfires, including the recent devastating fires in Hawaii. He pointed out research that said 80 percent of wildfires are ignited by humans.

Brown gave the example of a paper he recently authored titled ‘Climate warming increases extreme daily wildfire growth risk in California’. Brown said the paper, published in Nature last week, ‘focuses exclusively on how climate change has affected extreme wildfire behavior’ and ignored other key factors.

Brown laid out his claims in an article titled ‘I Left Out the Full Truth to Get My Climate Change Paper Published’. ‘I just got published in Nature because I stuck to a narrative I knew the editors would like. That’s not the way science should work,’ the article begins.

‘I knew not to try to quantify key aspects other than climate change in my research because it would dilute the story that prestigious journals like Nature and its rival, Science, want to tell,’ he wrote of his recently-published work.

‘This matters because it is critically important for scientists to be published in high-profile journals; in many ways, they are the gatekeepers for career success in academia. And the editors of these journals have made it abundantly clear, both by what they publish and what they reject, that they want climate papers that support certain preapproved narratives - even when those narratives come at the expense of broader knowledge for society.

‘To put it bluntly, climate science has become less about understanding the complexities of the world and more about serving as a kind of Cassandra, urgently warning the public about the dangers of climate change. However understandable this instinct may be, it distorts a great deal of climate science research, misinforms the public, and most importantly, makes practical solutions more difficult to achieve.’

A spokesperson for Nature said ‘all submitted manuscripts are considered independently on the basis of the quality and timeliness of their science’.

‘Our editors make decisions based solely on whether research meets our criteria for publication - original scientific research (where conclusions are sufficiently supported by the available evidence), of outstanding scientific importance, which reaches a conclusion of interest to a multidisciplinary readership,’ a statement said.

‘Intentional omission of facts and results that are relevant to the main conclusions of a paper is not considered best practice with regards to accepted research integrity principles,’ the spokesperson added.

Science was approached for comment.

Brown opened his missive with links to stories by AP, PBS NewsHour, The New York Times and Bloomberg which he said give the impression global wildfires are ‘mostly the result of climate change’.

He said that ‘climate change is an important factor’ but ‘isn’t close to the only factor that deserves our sole focus’.

Much reporting of the wildfires in Maui has said climate change contributed to the disaster by helping to create conditions that caused the fires to spark and spread quickly.

The blazes, which killed at least 115 people, are believed to have been started by a downed electricity line, but observers have said rising temperatures caused extremely dry conditions on the Hawaiian island.

Brown said the media operates like scientific journals in that the focus on climate change ‘fits a simple storyline that rewards the person telling it’.

Scientists whose careers depend on their work being published in major journals also ‘tailor’ their work to ‘support the mainstream narrative’, he said.

‘This leads to a second unspoken rule in writing a successful climate paper,’ he added. ‘The authors should ignore - or at least downplay - practical actions that can counter the impact of climate change.’

He gave examples of factors which are ignored, including a ‘decline in deaths from weather and climate disasters over the last century’. In the case of wildfires, Brown says ‘current research indicates that these changes in forest management practices could completely negate the detrimental impacts of climate change on wildfires’.

Poor forest management has also been blamed for a record number of wildfires in Canada this year.

But ‘the more practical kind of analysis is discouraged’ because it ‘weakens the case for greenhouse gas emissions reductions’, Brown said.

Successful papers also often use ‘less intuitive metrics’ to measure the impacts of climate change because they ‘generate the most eye-popping numbers’, he said.

He went on to claim that other papers he’s written which don’t match a certain narrative have been ‘rejected out of hand by the editors of distinguished journals, and I had to settle for less prestigious outlets’.

Brown concluded: ‘We need a culture change across academia and elite media that allows for a much broader conversation on societal resilience to climate. The media, for instance, should stop accepting these papers at face value and do some digging on what’s been left out.’

‘The editors of the prominent journals need to expand beyond a narrow focus that pushes the reduction of greenhouse gas emissions. And the researchers themselves need to start standing up to editors, or find other places to publish.’

ICECAP NOTE: See a telling media story on the real causes of the deadly Maui wildfires here and more here.

------------

By Francis Menton,

We highly recommend this site for science- and data-based stories on climate and energy policies in this world where “stupidity rules”.

On May 23, EPA put out its long-expected proposed Rule designed to eliminate, or nearly so, all so-called “greenhouse gas” emissions from the electricity-generation sector of the economy. The proposal came with the very long title: “New Source Performance Standards for GHG Emissions from New and Reconstructed EGUs; Emission Guidelines for GHG Emissions from Existing EGUs; and Repeal of the Affordable Clean Energy Rule.” The full document is 672 pages long.

Various not-very-far-off deadlines are set, ranging from as early as 2030 for some changes to coal plants, to at the latest 2038 for the last changes to natural gas plants. But how exactly is this emissions elimination thing to be accomplished? Today a substantial majority of U.S. electricity (about 60%) comes from one or the other of those fuels; and it is inherent in the burning of hydrocarbons that you get CO2 as a product. In all those 672 pages, EPA has only two ideas for how to eliminate the carbon emissions from combustion power plants: carbon capture and storage (CCS), and “green” hydrogen. Either you must implement one of those two ideas to meet EPA’s standards by the deadline, or you must close your power plant. But here’s the problem: both of those ideas are, frankly, absurd.

The deadline for commenting on the proposed Rule was August 8, although comments have continued to pile in after that date. Many hundreds of them have been received. If you have nothing else to do for the next month or two, you can review the comments at this link.

I have by no means made the effort to read all the comments, but I have gone looking for some of the more significant ones. Two that I can highly recommend are this one by a group of 21 red state AGs led by West Virginia, and this one by an overlapping group of 18 red state AGs led by Ohio. Both of those comments do an excellent job of dismantling the concept that either CCS or “green” hydrogen could ever work as a significant part of our electricity generation system. Of the two, the West Virginia comment is the much longer (54 pages) and goes into far more technical detail. But the Ohio comment, at 21 pages, has its share of good zingers as well.

The Ohio and West Virginia comments label the idea of CCS at the high rate demanded by EPA (90%) as either “infeasible” or not “viable,” and include recitations of the history of failed attempts to implement this frankly useless technology. From the Ohio comment (page 4):

A study of 263 carbon-capture-and-sequestration projects undertaken between 1995 and 2018 found that the majority failed and 78% of the largest projects were cancelled or put on hold. After the study was published in May 2021, the only other coal plant with a carbon-capture-and-sequestration attachment in the world, Petra Nova, shuttered after facing 367 outages in its three years of operation.

With the closure of Petra Nova, there remains in the entire world exactly one operating commercial CCS facility at a coal power station, the SaskPower Boundary Dam Unit 3 in Saskatchewan, Canada. That one is supposed to achieve the 90% capture rate that EPA demands, but with constant operating issues it has fallen way, way short:

[T]his [SaskPower] facility is the world’s only operating commercial carbon capture facility at a coal-fired power plant. And it has never achieved its maximum capacity. It also battled significant technical issues throughout 2021- to the point that the plant idled the equipment for weeks at a time. As a result, the plant achieved less than 37% carbon capture that year despite having an official target of 90%…

The West Virginia comment provides lots more technical detail on the failures of CCS. Why can’t a CCS system just easily suck up all the CO2 out of a power plant’s emissions stream? Because the effort to suck up the emissions takes energy from the output of the plant, and the higher the percentage of carbon emissions you seek to capture, the more of the energy output of the plant you consume. (I have previously described CCS efforts as a “war against the second law of thermodynamics.”) In the limiting case, you can use up all the power output of the plant on the CCS system and still not capture 100% of the CO2. From the West Virginia comment, pages 24-25:

Take efficiency to start. CCS units run on power, too. An owner can get that power from the plant itself. But this approach makes the plant less efficient by increasing its “parasitic load” - and CCS more than triples combustion turbines’ normal parasitic load… This is the cause the Wyoming study analyzed that showed installing CCS technology would devastate plants’ heat rates and lower net plant efficiency by 36%.

And that percentage relates to a system that captures well less than 100% of the plant’s carbon emissions. And these are only the start of the technical issues to be faced. For example, once you have captured all this CO2, where do you put it? Do you build an entire new national network of pipelines (at a cost of hundreds of billions of dollars) to transport it to some underground caverns somewhere? And then, are there environmental issues with the chemicals used to snag the CO2 out of the power plant’s emissions stream? From the West Virginia comment, page 27:

The Proposed Rule would force utilities to adopt and communities to accept all aspects of CCS technology without fully understanding the ramifications. For example, the environmental and health effects of CANSOLV - the leading amine-based and EPA-recommended CCS solvent, 88 Fed. Reg. at 33,291 - appear unknown; leading CANSOLV studies over the past decade don’t discuss its impact.

And then, if you have to increase the power output of the plant by 50% or so to power the CCS facility, doesn’t that then increase the emissions of nitrogen oxides (NOx) and particulates by a comparable amount? From the West Virginia comment, page 27:

Nearly a decade ago, the European Union’s European Environmental Agency released a study finding that CCS would increase “direct emissions of NOx and PM” by nearly a half and a third, respectively, because of additional fuel burned, and increase “direct NH3 emissions” “significantly” because of “the assumed degradation of the amine-based solvent.”

It goes on and on from there. And then there’s the idea of “co-firing” the power plants with “green” hydrogen, produced by using wind or solar power or something else magical to electrolyze water. EPA’s proposed Rule would impose such a requirement on existing natural gas plants to take them up to 96% hydrogen by 2038. A few insights from the West Virginia comment, page 35:

Most combustion turbines on the market today cannot handle anything more than a 5-10% blend [of hydrogen]; 20% is generally accepted as the absolute technological ceiling… Even in the best scenarios, a hydrogen volume fraction of 20% is usually the most technology currently can do.

And how about the problem (and cost) of producing the amounts of hydrogen that would be required? From the West Virginia comment, page 37:

America currently produces just .5% of the clean hydrogen we need under the Proposed Rule. The industry would have to close a 99.5% supply gap in just 15 years. EPA has offered no evidence showing that this gap will close.
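To get a feel for the size of that gap, here is a minimal sketch of the growth rate it implies. It assumes steady compound annual growth over the 15 years, which is an illustrative assumption and not something stated in the comment.

```python
# Implied growth needed to close the supply gap quoted above.
# ASSUMPTION: steady compound annual growth; the comment does not specify a path.
current_share = 0.005   # 0.5% of the clean hydrogen required
years = 15

# Factor by which production must grow to reach 100% of the requirement.
growth_factor = 1 / current_share

# Compound annual growth rate that achieves that factor in the given years.
annual_rate = growth_factor ** (1 / years) - 1

print(f"required growth factor: {growth_factor:.0f}x")
print(f"implied annual growth:  {annual_rate:.1%}")
```

Under these assumptions, production would have to grow about 200-fold, or a little over 40% per year, every year for 15 years.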

There is much, much more on issues like transporting and handling the hydrogen, cost of production, and so forth.

The conclusion is obvious and impossible to escape: These proposed methods to allow combustion power plants to continue to exist are not real and can never work. EPA intends to force the closure of all such electricity generation facilities. Will we have an electricity system that can still function at that point? They neither know nor care. After all, we have a planet to save here.

Somehow, in the weighing of the costs and benefits here, the bureaucrats appear to have completely lost track of the enormous benefits that reliable access to electricity has brought to the people. They will destroy that without giving the subject a second thought.

By Steve Goreham

Originally published in The Western Journal.

Which is more environmentally friendly, an energy source that uses one unit of land to produce one unit of electricity, or a source that uses 100 units of land to produce one unit of electricity? The answer should be obvious. Nevertheless, green energy advocates call for a huge expansion of wind, solar, and other renewables that use vast amounts of land to replace traditional power plants that use comparatively small amounts of land.

Vaclav Smil, professor emeritus of the University of Manitoba in Canada, extensively analyzed the power density of alternative sources used to generate electricity. He defined the power density of an electrical power source as the average flow of electricity generated per square meter of horizontal surface (land or sea area). The area measurement to estimate power density is complex. Smil included plant area, storage yards, mining sites, agricultural fields, pipelines and transportation, and other associated land and sea areas in his analysis.

Smil’s work allows us to compare the power density of electricity sources. If we set the output of a nuclear plant to one unit of land required for one unit of electricity output, then a natural gas-powered plant requires about 0.8 units of land to produce the same one unit of output. A coal-fired plant uses about 1.4 units of land to deliver an average output of one unit of power.

But renewable sources require vastly more land. A stand-alone solar facility requires about 100 units of land to deliver the same average electricity output as a nuclear plant that uses one unit of land. A wind facility uses about 35 units of land if only the concrete wind tower pads and the service roads are counted, but over 800 units of land for the entire area spanned by a typical wind installation. Production of electricity from biomass suffers the poorest power density, requiring over 1,500 units of land to output one unit of electricity.

As a practical example, compare the Ivanpah Solar Electric Generating System in the eastern California desert to the Diablo Canyon Nuclear Plant near Avila Beach, California. The Ivanpah facility produces an average of about 793 gigawatt-hours per year and covers an area of 3,500 acres. The Diablo Canyon facility generates about 16,165 gigawatt-hours per year on a surface area of 750 acres. The nuclear plant delivers more than 20 times the average output on about one-fifth of the land, or 100 times the power density of the solar facility.
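The comparison can be reproduced from the four figures quoted above. This is a minimal sketch that uses only the site footprints given in the paragraph, not Smil’s broader land accounting, and the variable names are illustrative.

```python
# Figures as quoted in the paragraph above (site footprint only).
ivanpah_gwh_per_year = 793
ivanpah_acres = 3_500
diablo_gwh_per_year = 16_165
diablo_acres = 750

# Ratio of average annual output (nuclear / solar).
output_ratio = diablo_gwh_per_year / ivanpah_gwh_per_year

# Ratio of land area (nuclear / solar).
land_ratio = diablo_acres / ivanpah_acres

# Output per acre for each site, then the ratio between them.
ivanpah_density = ivanpah_gwh_per_year / ivanpah_acres
diablo_density = diablo_gwh_per_year / diablo_acres
density_ratio = diablo_density / ivanpah_density

print(f"output ratio:  {output_ratio:.1f}x")
print(f"land ratio:    {land_ratio:.2f}")
print(f"density ratio: {density_ratio:.0f}x")
```

With these inputs the output ratio is a little over 20, the land ratio is about one-fifth, and the density ratio comes out at roughly 95, which the article rounds to 100.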

To approach 100 percent renewable electricity using primarily wind and solar systems, the land requirements are gigantic. “Net-Zero America,” a 2020 study published by Princeton University, calls for wind and solar to supply 50 percent of US electricity by 2050, up from about 14 percent today. The study estimated that this expansion would require about 228,000 square miles of new land (590,000 square kilometers), not including the additional area needed for transmission lines. This is an area larger than the combined area of Illinois, Indiana, Iowa, Kentucky, West Virginia, and Wisconsin. This area would be more than 100 times as large as the physical footprint of the coal and natural gas power systems that would be replaced.

The land taken for wind and solar can seriously impact the environment. Stand-alone solar systems blanket fields and deserts, blocking sunlight and radically changing the ecosystem, and driving out plants and animals. Since 2000, almost 16 million trees have been cut down in Scotland to make way for wind turbines, a total of more than 1,700 trees felled per day. This environmental devastation will increase the longer Net Zero goals are pursued.

Wind and solar are dilute energy. They require vast amounts of land to generate the electricity required by modern society. Without fears about human-caused global warming, wind and solar systems would be considered environmentally damaging. Net-zero plans for 2050, powered by wind and solar, will encounter obstacles with transmission, zoning, local opposition, and just plain space that are probably impossible to overcome.

Steve Goreham is a speaker on energy, the environment, and public policy and the author of the new book Green Breakdown: The Coming Renewable Energy Failure.

by Ron Barmby

Political tunnel vision on global warming has resulted in declaring increases in atmospheric carbon dioxide an existential threat. But the United Nations’ resolve to reduce carbon dioxide levels runs counter to its goals to end world hunger, promote world peace and protect global ecosystems. It fails to address the key question relating to those three goals: Which pathway creates the greatest good to the greatest multitude - reducing or increasing CO2?

The numbers since the year 2000 provide convincing evidence that increasing carbon dioxide has positive impacts and reducing carbon emissions entails dire consequences.

World Hunger

The pre-industrial (circa 1850) atmospheric CO2 concentration of 280 ppm (parts per million) compares to today’s 420 ppm, a 50% increase. Meanwhile, the global population has risen roughly 570%, from 1.2 billion to 8 billion.
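
The two percentages above follow from the ordinary percent-change formula. A minimal sketch, using only the start and end values quoted in the paragraph; the helper name `percent_increase` is an illustrative assumption.

```python
def percent_increase(old: float, new: float) -> float:
    """Percent change from old to new, relative to old."""
    return (new - old) / old * 100


# Values as quoted in the text: CO2 in ppm, population in billions.
co2_increase = percent_increase(280, 420)
population_increase = percent_increase(1.2, 8.0)

print(f"CO2 concentration: +{co2_increase:.0f}%")
print(f"Global population: +{population_increase:.0f}%")
```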

Those extra 6.8 billion people are mostly being fed, and it’s not all because of human agricultural productivity, pest control and plant genetics.

Observations of Earth’s vegetative cover since the year 2000 by NASA’s Terra satellite show a 10% increase in vegetation in the first 20 years of the century. Clearly, something other than agriculture is helping to improve overall plant growth.

In a recent study supported by the U.S. Department of Energy, Dr. Charles Taylor and Dr. Wolfram Schlenker quantified how much of that extra greening resulted in food for human consumption since 2000. Using satellite imagery of U.S. cropland, they estimated that a 1 ppm increase in CO2 led to an increase of 0.4%, 0.6% and 1% in yield for corn, soybeans and wheat, respectively. They also extrapolated back to 1940 and suggested that the 500% increased yield of corn and 200% increased yield of soybeans and winter wheat are largely attributable to the 100 ppm increase in CO2 since then.

CO2 fertilization is not only greening the Earth, it’s feeding the very fertile human race.

World Peace

Though adding CO2 to the atmosphere does not promote world peace, attempts to stop CO2 emissions in the western democracies have increased the CO2 emissions, wealth and influence of totalitarian Russia and China.

Eurostat, the statistical office of the European Union (EU), reports that the EU’s reliance on imported natural gas increased from 15.5% of its energy needs in 2000 to 22.5% by 2020. Russia was the main supplier of Europe’s natural gas. Holding Europe’s energy security in its pipelines not only helped finance Russia’s 2022 invasion of Ukraine, but it also limited the economic sanctions Europe could impose in retaliation.

According to the scientific online publication Our World in Data, between 2000 and 2020 the G7 nations lost 13.8% of the world share of GDP and China picked up 12%.

The West (the EU plus the UK, U.S., Canada and Japan) transferred GDP growth to China and energy security to Russia and was able to reduce its share of CO2 emissions from 45% of the global total in 2000 to 25% in 2020. In the same period China’s CO2 emissions grew from 14% of the total to 31%, contributing to an increase of 39% in total global CO2 emissions.
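
Because the figures above are shares of a growing total, it can help to convert them to absolute changes. The sketch below assumes the shares and the 39% global increase exactly as quoted, and sets global emissions in 2000 to an arbitrary index of 100. The index and the helper name are illustrative assumptions, not reported data.

```python
# Shares of global CO2 emissions and total growth, as quoted in the text.
WEST_SHARE_2000, WEST_SHARE_2020 = 0.45, 0.25
CHINA_SHARE_2000, CHINA_SHARE_2020 = 0.14, 0.31
GLOBAL_GROWTH = 0.39

# Assumption: index global emissions in 2000 at 100 (arbitrary units).
TOTAL_2000 = 100.0
TOTAL_2020 = TOTAL_2000 * (1 + GLOBAL_GROWTH)


def absolute_change_percent(share_2000: float, share_2020: float) -> float:
    """Percent change in a bloc's absolute emissions implied by its shares."""
    emissions_2000 = share_2000 * TOTAL_2000
    emissions_2020 = share_2020 * TOTAL_2020
    return (emissions_2020 - emissions_2000) / emissions_2000 * 100


west_change = absolute_change_percent(WEST_SHARE_2000, WEST_SHARE_2020)
china_change = absolute_change_percent(CHINA_SHARE_2000, CHINA_SHARE_2020)

print(f"West, absolute emissions:  {west_change:+.0f}%")
print(f"China, absolute emissions: {china_change:+.0f}%")
```

On those assumptions the West’s absolute emissions fall by a little under a quarter, while China’s roughly triple.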

The unintended consequence of the West’s attempts to reduce CO2 emissions has been to shore up Chinese and Russian dictatorships and, in Russia’s case, to partly fund the invasion of a sovereign and democratic neighbor, Ukraine.

World Ecology

Much of the human footprint on Earth is where the products we consume originate: We either grow them on the planet’s surface or extract them from within its crust.

In testimony to the U.S. House Committee on Energy and Commerce in 2021, Mark Mills, a senior fellow at the Manhattan Institute, estimated that replacing each unit of hydrocarbon energy by “clean tech” energy would on average result in the extraction of five to 10 times more materials from the Earth than does hydrocarbon production.

Mills also pointed out that Chinese firms dominate the production and processing of many critical rare earth elements and that nearly all the growth in mining is expected to be abroad, increasingly in fragile, biodiverse wilderness areas.

Decarbonization will impose the heavy environmental cost of an unprecedented increase in mining.

One Last Number

Since El Nino induced a modern peak global average temperature in 1998, global warming has been essentially zero.

The numbers don’t lie. Allowing more CO2 emissions is better for ending world hunger, promoting world peace, and protecting global ecosystems.

This commentary was first published at Real Clear Energy, July 6, 2023, and can be accessed here.

Ron Barmby, a Professional Engineer with a master’s degree in geosciences, had a 40-year career in the energy industry that covered 40 countries and five continents. He is author of “Sunlight on Climate Change: A Heretic’s Guide to Global Climate Hysteria” and is a proud member of the CO2 Coalition, Arlington, Virginia.

Scientific Paper Concludes Refrigerants’ Gases Pose No Threat to Climate

CO2 Coalition, 28 June

The paper examines the warming potential of 14 halogenated gases, which are more specifically identified as hydrochlorofluorocarbons (HCFCs) and hydrofluorocarbons (HFCs). The gases are to be phased out as refrigerants because of their purported threat of dangerously warming the climate. The ban on these chemicals is being imposed under amendments to a 1987 international agreement known as the Montreal Protocol, which forced the abandonment of Freon as a refrigerant because of concerns about that chemical’s capacity to destroy ozone in the upper atmosphere. The U.S. Senate ratified the so-called Kigali Amendment to the Montreal Protocol in 2022. Drs. Van Wijngaarden and Happer conclude that the 14 gases’ contribution to atmospheric warming amounts to only 0.002 degrees C per decade, a warming indistinguishable from zero.

-----------

School Official Laments Cost and Performance of Electric Buses

Heather Hamilton, Washington Examiner, 29 May

One Michigan school official admitted his district’s move to electric buses was less than the government has touted it to be. “Electric buses are approximately five times more expensive than regular buses, and the electrical infrastructure, which was originally estimated to be only about $50,000, give or take, for those four buses ended up being more like $200,000.”

------------

Wind Fails Texas Again

Bill Peacock, Master Resource, 26 June

Wind’s market share is far higher in temperate months than in the hot summer months or the cold winter months when loads are heavy and generation is needed. In March, April, May, October, and November, wind’s market share is 31.7%. In the winter months of December, January, and February, wind’s market share drops to 25.7%. In the summer months of June, July, August, and September, wind’s market share plummets to 18.1%.

-----------

Baseball-Sized Hail Smashing into Panels at 150 MPH Destroys Scottsbluff Solar Farm

Kevin Killough, Cowboy State Daily, 27 June

Baseball-sized hail took out a solar farm in Scottsbluff, Nebraska, on Friday. The hail shattered most of the panels on the 5.2-megawatt solar project, sparing an odd panel like missing teeth in a white smile. “Just by looking at it, it looks destroyed to me,” [Scottsbluff city manager Kevin] Spencer said.

-----------

London ULEZ Expansion Will Force 40% of Drivers To Give Up Their Cars in August

Felix Reeves, Express, 23 June

A new study has found that two in five Londoners will be forced to change or give up their current car under the expansion of the Ultra Low Emission Zone coming into force in just over two months.

-----------

EU Looks Into Blocking Out the Sun as Climate Efforts Falter

John Ainger, Bloomberg Green, 26 June

The European Union will join an international effort to assess whether large-scale interventions such as deflecting the sun’s rays or changing the Earth’s weather patterns are viable options for fighting climate change.

By Meteorologist Art Horn

“The opinions that are held with passion are always those for which no good ground exists; indeed the passion is the measure of the holder’s lack of rational conviction.”

-Bertrand Russell 10/10/21

Published at theartofweather.net

Wednesday June 7th, 2023

There’s just too much going on not to talk about it. To quote Jack Klompus from Seinfeld: “I’m sorry. I’m sitting here, the whole meeting, holding my tongue. I’ve known you a long time Morty, but I cannot hold it in any longer.”

I’m referring to the current situation of the Canadian wildfires and the resulting, persistent smoke issues down here in the United States. Under normal conditions a large, very slow-moving storm rotating counterclockwise in the Canadian Maritimes would be circulating cool, dry, clean Canadian air into New England. There is such a storm present in that location now. But with all these fires in Quebec Province the storm that is situated in the Maritimes is doing the opposite! The smoke from the fir...ta. In court on February 15th, 2022, she was convicted on four counts of mischief against property in connection with multiple arson fires in the Bonnyville area of Alberta, Canada. She actually admitted to setting 32 fires at the hearing! Apparently she was quite proud of her destruction. She was sentenced to 9 months in jail but amazingly she served no jail time.

Can I prove that at least some of these current fires have been deliberately set by eco-terrorists? No, not until someone claims responsibility or is arrested but it seems plausible to me.

Why would someone want to destroy forests that they claim they are trying to save? At least part of the answer is that by starting the fires and then blaming the cause on climate change they get the news coverage they couldn’t get any other way. The news media actually does the work for them since they are obsessed with climate change causing everything! Today every natural disaster they report about is now the result of climate change.

As a side note, I saw yet another of the seemingly endless stories about sea ice loss in the Arctic. This story from thedailybeast.com reports that another computer model study forecasts that after the year 2030 there will be no sea ice in the Arctic Ocean by the end of the summer.

Of course, one has to only go back to 2007 when there were similar predictions warning that by 2022 summer sea ice would be long gone from the Arctic. Another in the long line of climate change forecasts fails. Actually, sea ice data from the Danish Meteorological Institute reveals that as of today the amount of Arctic sea ice is within 75 percent of the 1981 to 2010 average for this time of year. Where are you going to see that in the news? Nowhere!

-----------

Note: Mark Perry on Carpe Diem here listed 18 major failures.

“Most of the people who are going to die in the greatest cataclysm in the history of man have already been born,” wrote Paul Ehrlich in a 1969 essay titled “Eco-Catastrophe!” “By....[1975] some experts feel that food shortages will have escalated the present level of world hunger and starvation into famines of unbelievable proportions. Other experts, more optimistic, think the ultimate food-population collision will not occur until the decade of the 1980s.”

Ehrlich sketched out his most alarmist scenario for the 1970 Earth Day issue of The Progressive, assuring readers that between 1980 and 1989, some 4 billion people, including 65 million Americans, would perish in the “Great Die-Off.” “It is already too late to avoid mass starvation,” declared Denis Hayes, the chief organizer for Earth Day, in the Spring 1970 issue of The Living Wilderness.

Leonard Nimoy talked about the coming ice age in 1979 here.

Tony Heller exposes the fraudsters here.

Unlike the government, businesses must respect reality or they will go bankrupt.

The reality is that atmospheric CO2 is remarkably beneficial. Exxon knows that the byproducts of burning fossil fuels, carbon dioxide and water, are more than completely benign. They are essential. All life on this planet comes from carbon dioxide and water. If we combine those two ingredients, we get soda water. But if we give them to a plant in the presence of sunlight, it will produce glucose and from that other sugars, starches, fats, proteins, and cellulose. In other words, all life.

What about warming from the Greenhouse Effect? CO2 is a greenhouse gas like water vapor. But water vapor completely dominates that effect, so much that climate alarmists quietly assume that it will produce most of the warming from rising CO2 in the atmosphere. But water vapor is self-limiting, such that it does not produce catastrophic warming.

One important way that water vapor controls warming is through the formation of clouds that reflect sunlight back to space before it can heat the ground.

John Clauser, a co-winner of the 2022 Nobel Prize in Physics, thinks that clouds are the key to understanding our climate, not carbon dioxide. I agree with him and would add the entire hydrological cycle to the negative feedbacks that keep our climate stable.

John Clauser has joined us as a Director of the CO2 Coalition to refute the vast nonsense coming from the scientifically illiterate.

Exxon should be commended for finally standing up to the climate idiots, just like John Clauser is doing.

--------------

Exxon Says World Hitting Mythical “Net-Zero” by 2050 is Nonsense

May 19, 2023

Finally, at least one Big Oil company is willing to go on the record countering the mass insanity of the left. The left wants humanity to kill itself in order to achieve mythical “net zero” (no extra carbon emissions) by 2050. Leftist lunatics have tried to brainwash the world that if we don’t hit net zero (which is impossible), that’s it. Lights out. The world toasts, and we burn ourselves to a cinder. There is no hope for the future. Which is complete nonsense. Exxon said as much in an official statement to shareholders in an SEC filing.

Proxy adviser Glass Lewis told Exxon (and shareholders) the company should prepare for the end of the world, as in the use of fossil fuels being completely phased out by 2050, leaving Exxon without a business if it doesn’t change its product lines. After picking themselves up off the floor from laughing so hard, Exxon told Glass Lewis, via a response in an SEC filing (below), they are (our words) smoking something funny.

“Glass Lewis apparently believes the likelihood of the IEA NZE scenario is well beyond what the IEA itself contends: that the world is not on the NZE path and that this is a very aggressive scenario,” Exxon said. “It is highly unlikely that society would accept the degradation in global standard of living required to permanently achieve a scenario like the IEA NZE.”