Nov 10, 2013

Super Typhoon Haiyan/Yolanda, another overhyped storm that didn’t match early reports

ICECAP UPDATE: Not a big fan of the MSM's coverage of severe weather, but 4 stars to Jason Samenow and Dennis Mersereau of the CWG at WAPO for these two apolitical stories: “Why so many people died from Haiyan and past southeast Asia typhoons” and “Typhoon and hurricane storm surge disasters are unacceptable.” Maybe now that Andrew Freedman has moved over to Climate Central, the other scientists can do more honest and balanced coverage.

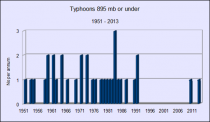

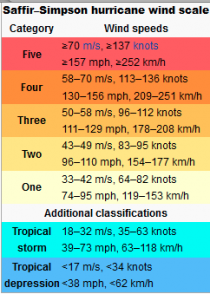

Note that the claim that storms are becoming more extreme is challenged by this graph of the 35 typhoons with a central pressure of 895 mb or less, the figure measured for Yolanda. The chart below plots these by year.

------

Here are the sorts of headlines we had on Friday, for example this one from the Huffington Post, which got all excited about some early reports from The Weather Company’s Andrew Freedman:

Super Typhoon Haiyan - which is one of the strongest storms in world history based on maximum windspeed - is about to plow through the Central Philippines, producing a potentially deadly storm surge and dumping heavy rainfall that could cause widespread flooding. As of Thursday afternoon Eastern time, Haiyan, known in the Philippines as Super Typhoon Yolanda, had estimated maximum sustained winds of 195 mph with gusts above 220 mph, which puts the storm in extraordinarily rare territory.

UPDATE 5: from this NYT article:

Before the typhoon made landfall, some international forecasters were estimating wind speeds at 195 m.p.h., which would have meant the storm would hit with winds among the strongest recorded. But local forecasters later disputed those estimates. “Some of the reports of wind speeds were exaggerated,” Mr. Paciente said. The Philippine weather agency measured winds on the eastern edge of the country at about 150 m.p.h., he said, with some tracking stations recording speeds as low as 100 m.p.h.

Ah, those wind speed estimates; they don’t always match up with reality later. - Anthony

==========

By Paul Homewood

Sadly it appears that at least 1200* lives have been lost in Typhoon Yolanda (or Haiyan), which has just hit the Philippines. There appear to have been many unsubstantiated claims about its size, though these now appear to be giving way to accurate information.

Nevertheless, the BBC are still reporting today:

Typhoon Haiyan, one of the most powerful storms on record to make landfall...The storm made landfall shortly before dawn on Friday, bringing gusts that reached 379km/h (235 mph).

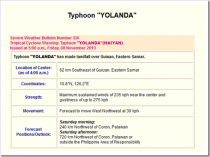

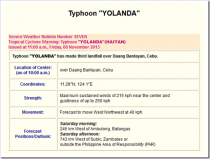

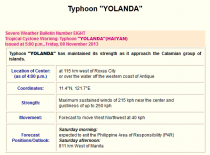

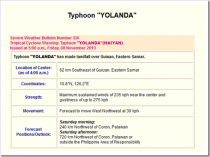

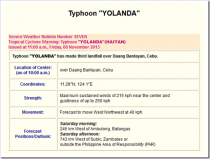

Unfortunately we cannot always trust the BBC to give the facts these days, so let’s see what the Philippine Met Agency, PAGASA, have to say. Here are the surface wind reports:

So at landfall the sustained wind was 235 kmh or 147 mph, with gusts up to 275 kmh or 171 mph. That is more than 60 mph below the 235 mph gusts the BBC have quoted.
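As a quick check on the arithmetic, here is a minimal sketch that converts PAGASA's km/h figures to mph using the exact definition of the international mile (1.609344 km). The variable names are just illustrative. A direct conversion gives roughly 146 mph sustained and 171 mph for the gusts, within a mile per hour of the figures above, and a gap of a little over 60 mph against the BBC's gust figure.

```python
# Convert PAGASA's reported wind speeds from km/h to mph and compare
# them with the gust figure the BBC quoted (235 mph).
KM_PER_MILE = 1.609344  # exact definition of the international mile


def kmh_to_mph(kmh: float) -> float:
    """Convert a speed in kilometres per hour to miles per hour."""
    return kmh / KM_PER_MILE


pagasa_sustained_kmh = 235
pagasa_gust_kmh = 275
bbc_gust_mph = 235

sustained_mph = kmh_to_mph(pagasa_sustained_kmh)
gust_mph = kmh_to_mph(pagasa_gust_kmh)

print(f"Sustained: {pagasa_sustained_kmh} km/h = {sustained_mph:.0f} mph")
print(f"Gusts:     {pagasa_gust_kmh} km/h = {gust_mph:.0f} mph")
print(f"BBC gust figure exceeds PAGASA's by {bbc_gust_mph - gust_mph:.0f} mph")
```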

The maximum strength reached by the typhoon appears to have been around landfall, as the reported wind speeds three hours earlier were 225 kmh (140 mph).

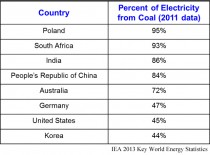

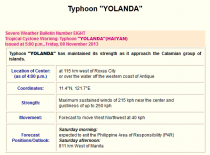

Terrible though this storm was, it only ranks as a Category 4 storm, and it is clear nonsense to suggest that it is “one of the most powerful storms on record to make landfall”.

Saffir-Simpson Scale
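For readers who want to see how a sustained wind speed maps onto a category, here is a small sketch using the Saffir-Simpson Hurricane Wind Scale thresholds in mph (Category 1 from 74, 2 from 96, 3 from 111, 4 from 130, 5 from 157). The function name is hypothetical. Note that the scale is defined for 1-minute sustained winds, and the sketch simply applies the thresholds to whatever figure it is given without adjusting for the averaging period a particular agency uses.

```python
# Classify a sustained wind speed (mph) on the Saffir-Simpson Hurricane
# Wind Scale. Thresholds are lower bounds for each category.
def saffir_simpson_category(sustained_mph: float) -> int:
    """Return 0 (below hurricane strength) or a category from 1 to 5."""
    thresholds = [(157, 5), (130, 4), (111, 3), (96, 2), (74, 1)]
    for lower_bound, category in thresholds:
        if sustained_mph >= lower_bound:
            return category
    return 0


for label, mph in [("PAGASA at landfall", 147), ("Early forecaster estimate", 195)]:
    print(f"{label}: {mph} mph -> Category {saffir_simpson_category(mph)}")
```

On these inputs the PAGASA landfall figure falls in Category 4, while the early 195 mph estimate would have been Category 5.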

Given the geography of the Pacific, most typhoons stay out at sea, or only hit land once they have weakened. In terms of total numbers, though, the busiest typhoon season in recent decades was 1964, whilst the following year logged the highest number of super typhoons (which equate to a strong Category 4 or higher). Of the eleven super typhoons that year, eight were Category 5s.

http://en.wikipedia.org/wiki/Typhoon

So far this year, before Yolanda, there have been just three Category 5s, none of which hit land at that strength.

Personally I don’t like to comment on events such as these until long after the dust has settled. Unfortunately though, somebody has to set the record straight if we cannot rely on the BBC and others to get the basic facts right.

UPDATE

In case anyone thinks I am overreacting, take a look at the Daily Mail headlines.

Just looking at it again, is it possible the MSM are confusing mph with kmh? It seems quite a coincidence that PAGASA report sustained winds of 235 kmh, the same number the BBC give in mph for the gusts.
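One way to test the hunch is to assume PAGASA's 235 km/h figure was mislabelled as 235 mph and then converted back to km/h. A short sketch of that check follows; the variable names are illustrative. It gives about 378 km/h, within roughly 1 km/h of the 379 km/h the BBC printed, which is consistent with a unit mix-up though it does not prove one.

```python
# Hypothesis check: if PAGASA's 235 km/h figure were mislabelled as
# 235 mph, converting that value back to km/h should land close to the
# 379 km/h the BBC printed.
KM_PER_MILE = 1.609344

pagasa_kmh = 235
mislabelled_mph = pagasa_kmh  # same number, wrong unit
implied_kmh = mislabelled_mph * KM_PER_MILE

print(f"{mislabelled_mph} 'mph' = {implied_kmh:.0f} km/h (BBC printed 379 km/h)")
print(f"Overstatement factor: {implied_kmh / pagasa_kmh:.2f}x")
```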

UPDATE 2

I have just registered a complaint at the Press Complaints Commission against the Mail article. If anyone spots similar articles elsewhere, let me know and I will add them to my complaint.

UPDATE 3

I seem to have been right about the kmh/mph confusion!

I’ve just scanned down the Mail article and seen this.

Unless they think “gusts” are less than “winds”, it looks like someone has boobed.

===========

UPDATE 4: Kent Noonan writes in with this addition:

CNN has had several articles stating the same numbers for wind speed as BBC and Mail. I saw these numbers first last night at 10PM Pacific time.

Today’s story: “Powered by 195-mph winds and gusts up to 235 mph, it then struck near Tacloban and Dulag on the island of Leyte, flooding the coastal communities.”

If these ‘news’ agencies don’t issue a correction, we will be forever battling the new meme of “most powerful storm in world history”.

Look at today’s Google search for “most powerful storm”:

“All you need to know Typhoon Haiyan, world’s most powerful storm” by FP Staff Nov 8, 2013

Then they go on to correctly state gusts of 170 mph!!

UPDATE 6: (update 5 is at the head of the post)

BBC now reporting reduced wind speeds that would make it a Cat 4 storm:

Typhoon Haiyan, one of the most powerful storms on record to make landfall, swept through six central Philippine islands on Friday. It brought sustained winds of 235km/h (147mph), with gusts of 275 km/h (170 mph), with waves as high as 15m (45ft), bringing up to 400mm (15.75 inches) of rain in places.

Source. (h/t David S)

==========

* Reports are varying wildly

The Red Cross in the Philippines says 1200 in this report.

But now Reuters is claiming an estimate of 10,000, based on a late-night meeting of officials at the Governor’s Office.

At about the same time as the Reuters 10K report, television news in the Philippines put the death toll at 151.

Early reports often vary widely, and it will be some time before accurate numbers are produced.

Our hearts and prayers go to the Philippine people. For those who wish to help, here is the website of the Philippine Red Cross.

Nov 01, 2013

Global Warming Alarmists Are Overrun By The Facts

The global warming alarmists continue to go about their business - which is minding everyone else’s business - while their yarn keeps fraying. Their latest problem: a study that says nature, not man, drives climate.

Last week President Obama issued the executive order “Preparing the United States for the Impacts of Climate Change.”

It’s almost 3,000 words outlining a plan to help the country get through “prolonged periods of excessively high temperatures” and “more heavy downpours” as well as “an increase in wildfires, more severe droughts, permafrost thawing, ocean acidification and sea-level rise.”

The order even insists that these dire conditions “are already affecting communities, natural resources, ecosystems, economies, and public health across the nation.”

Clearly the White House missed the news (isn’t that where Obama has learned about the various scandals that have suffused his administration?) that there has been no warming since 1997.

What’s more, it’s also missed the news about a peer-reviewed paper that recently appeared in the journal Climate Dynamics. According to the science, the pause in warming that began as temperatures leveled off in the late 1990s could extend into the 2030s.

Paper authors Judith Curry, head of the School of Earth and Atmospheric Sciences at the Georgia Institute of Technology, and Marcia Wyatt, from the Department of Geological Sciences at the University of Colorado-Boulder, found - no surprise here - that the United Nations climate models that predict a scorched Earth are not reliable.

“The growing divergence between climate model simulations and observations raises the prospect that climate models are inadequate in fundamental ways,” says Curry.

What Curry and Wyatt see, and which the models could not project, given the junk that was fed into them, is a natural cycle of warming and cooling.

The summary of the paper describes a “‘stadium-wave’ signal that propagates like the cheer at sporting events” that covers “the Northern Hemisphere through a network of ocean, ice, and atmospheric circulation regimes that self-organize into a collective tempo.”

This “wave periodically enhances or dampens the trend of long-term rising temperatures, which may explain the recent hiatus in rising global surface temperatures,” the summary said.

The paper also explains that “declining sea ice extent over the last decade is consistent with the stadium wave signal.”

What’s more, “the wave’s continued evolution portends a reversal of this trend of declining sea ice.”

And the role of man’s greenhouse-gas emissions on sea ice decline? Apparently it’s not so significant.

While Wyatt says “the stadium wave signal does not support or refute anthropogenic global warming,” Curry promises that “this paper will change the way you think about natural internal variability,” a factor that the alarmists tend to deny.

Curry also says the paper “provides a very different view from” a study featured last month by the New York Times whose lead author says that by 2047, give or take five years, “the coldest year in the future will be warmer than the hottest year in the past.”

We don’t expect the alarmists to look into this “very different view.” They’ve decided that humans are warming the planet by burning fossil fuels and any evidence to the contrary is dismissed.

But they can’t really believe anything else, can they? If they did, they would lose their justification for meddling in private affairs. And that, not the environment, is what the global warming scare is really all about.

--------

Obama’s ‘Social Cost of Carbon’ Is at Odds with Science

By Paul C. “Chip” Knappenberger

This article appeared in Investor’s Business Daily (Online) on October 28, 2013.

The Obama administration’s latest, greatest weapon to prosecute its war on global warming is something called the “social cost of carbon.” It is an instrument honed on ill-founded climate alarm and is readily blunted in the face of the best science.

The social cost of carbon is supposed to be a complete accounting of the market externalities, both positive and negative, resulting from carbon dioxide emissions released by burning fossil fuels like coal, oil, or natural gas.

But as its name implies, the government’s accounting of the social cost of carbon focuses almost entirely on conjured “costs” while ignoring proven “benefits” of carbon dioxide emissions.

The administration has determined that each ton of carbon dioxide emitted into the atmosphere today from human activities leads to a future cost to global society of about $40 (in today’s dollars).

This assigned $40 premium on carbon dioxide emissions proves to be a powerful sword that the administration has been wielding to slash the apparent costs of a flurry of proposed new rules and regulations aimed at reducing emissions.

Here’s how it works: any new proposed regulation viewed as reducing future carbon dioxide emissions gets a cost credit for each ton of reduced emissions equivalent to the value of the social cost of carbon. That credit is then used to offset the true costs.

In this way, all qualifying rules and regulations, including the EPA’s promised emissions limits on new and existing power plants, appear less costly - a critical asset, as costs are often the greatest barrier to approval.
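To make the offset mechanism concrete, here is a hypothetical sketch: each avoided ton of CO2 is credited at the social cost of carbon (SCC) and the credit is netted against the rule's compliance cost. The compliance cost and tonnage are made-up illustrative numbers, and the sketch ignores the discounting and multi-year accounting a real regulatory analysis would use. Running it at the $25 and $40 SCC values the article cites shows the credit growing by 60% with no change to the rule itself.

```python
# Hypothetical illustration of netting an SCC credit against a rule's cost.
# The rule's cost and avoided tonnage are invented for illustration only.
def net_cost(compliance_cost_usd: float, tons_avoided: float, scc_usd_per_ton: float) -> float:
    """Compliance cost minus the SCC credit for avoided emissions."""
    return compliance_cost_usd - tons_avoided * scc_usd_per_ton


HYPOTHETICAL_COMPLIANCE_COST = 100_000_000  # dollars, illustrative only
HYPOTHETICAL_TONS_AVOIDED = 2_000_000       # tons of CO2, illustrative only

for scc in (25, 40):  # previous and May 2013 SCC estimates cited in the article
    net = net_cost(HYPOTHETICAL_COMPLIANCE_COST, HYPOTHETICAL_TONS_AVOIDED, scc)
    print(f"SCC ${scc}/ton -> apparent net cost ${net:,.0f}")
```

With these invented inputs, the same rule's apparent net cost drops from $50 million to $20 million purely because the SCC value changed.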

Since the war on global warming is a high priority within the Obama administration, finding ways to make the social cost of carbon appear to be as high as possible is the ongoing objective.

Back in May, the administration increased its previous estimate by more than 50%, from $25 to $40, which means that all proposed carbon dioxide emissions cuts are now valued some 60% higher.

But that increase doesn’t jibe with science. In fact, in its pursuit of a high social cost of carbon the administration is going further down a path that is divergent from the one paved by the very best science on the issue.

For example, its new estimate completely ignores a large and growing body of scientific evidence that suggests that the Earth’s climate is much less sensitive to carbon dioxide emissions than the administration assumed.

This means that carbon dioxide emissions result in less global warming, less sea level rise, and less overall climate impact than previously thought.

In other words, the social “costs” that may result from human-caused climate change are substantially less, by some 33%, than those in the administration’s calculation.

Another important point largely ignored by the current administration is that there are “benefits” in addition to the “costs” of carbon dioxide emissions.

One of the key benefits is enhanced global food production. Carbon dioxide is a fertilizer for plants, and more carbon dioxide in the atmosphere means stronger, healthier, and more productive vegetation, including most crops - a fact established by literally thousands of scientific studies.

A new analysis by Dr. Craig Idso of the Center for the Study of Carbon Dioxide and Global Change estimates that over the last 50 years, the value of global food production has increased by $3.2 trillion as a result of our carbon dioxide emissions.

The handling of this large, significant and proven benefit from carbon dioxide is grossly deficient in the administration’s accounting of the social cost of carbon.

Had the new science on the Earth’s climate sensitivity to carbon dioxide, the science on carbon dioxide’s role as a plant fertilizer, and other critical issues not been largely ignored, the administration’s latest estimate of the social cost of carbon would have dropped to near zero, or perhaps actually become negative.

A proper accounting of the social cost of carbon would have sheathed the administration’s blade, or even turned it upon itself, as Congress and the American people would see the true cost of the regulations being imposed on them, and the president’s climate war effort would be eviscerated for lack of alarm.

Oct 31, 2013

US citizens pay for “solar school” foolishness

Steve Goreham

Originally published in The Washington Times. Reprinted with permission of author

Solar systems are being installed at hundreds of schools across the United States. Educators use solar panels to teach students about the “miracle” of energy sourced from the sun. But a closer look at these projects shows poor economics and a big bill for citizens.

Earlier this month, the Natural Resources Defense Council (NRDC) launched its “Solar Schools” campaign, an effort to raise $54,000 to help “three to five to-be-determined schools move forward with solar rooftop projects.” The NRDC wants to “help every school in the country go solar.” The campaign uses a cute video featuring kids talking about how we’re “polluting the Earth with gas and coal” and how we can save the planet with solar.

Wisconsin is a leader in the solar school effort. More than 50 Wisconsin high schools have installed solar panels since 1996 as part of the SolarWise program sponsored by Wisconsin Public Service (WPS), a state utility. The program solicits donations and provides funds to schools to install photovoltaic solar systems. The WPS website praises the program, stating, “The best way to leave a healthy planet for future generations is by teaching young people to become good stewards of the environment.”

But one has to question the utility of solar panels in Wisconsin, a state beset by low sunlight levels and ample winter snowfall. Last summer, solar panels were installed at Mishicot High School, the 50th school in the SolarWise program, at a cost of $30,000. The panels save the school about $300 per year in electric bills. With a 100-year payback, this system would never be installed by anyone seeking an economic return on investment. Are they teaching economics at Mishicot High?
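The payback figures in this article are simple paybacks: up-front cost divided by annual bill savings. A minimal sketch of that calculation follows; the function name is illustrative. It deliberately ignores financing, discounting, changing electricity prices, panel degradation and maintenance, so it is only a rough screening number.

```python
def simple_payback_years(system_cost: float, annual_savings: float) -> float:
    """Years for cumulative bill savings to equal the up-front cost.

    Ignores financing, discounting, electricity-price changes, panel
    degradation and maintenance, so it is only a rough screening figure.
    """
    if annual_savings <= 0:
        raise ValueError("annual_savings must be positive")
    return system_cost / annual_savings


# Mishicot High School figures quoted above: $30,000 system, ~$300/year saved.
print(f"Mishicot payback: {simple_payback_years(30_000, 300):.0f} years")
```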

In Illinois, Lake Zurich Middle School South installed a five-panel one-kilowatt photovoltaic system in August. The Lake Zurich Courier provided the headline, “Lake Zurich, Vernon Hills schools save with solar power.” The panels will save the school a little over $100 per year in electricity charges at a system cost of almost $9,000, a project payback of more than 70 years.

While the school may be saving, Illinois citizens are paying. Ninety percent of project funding came from the Illinois Clean Energy Community Foundation, which was established by a $225 million grant from Commonwealth Edison in 1999, provided from the electricity bills of Illinois citizens.

In Southwest Florida, 90 schools are installing 5- to 10-kilowatt solar arrays to “reduce energy costs and provide a learning opportunity” as part of Florida Power and Light’s “Solar for Schools” initiative. Panels cost from $50,000 to $80,000 and save electricity worth about $600 to $1,000 per year, depending upon the size of the system. With a payback of roughly 80 years, these projects will never pay off, because solar cells need to be replaced after 25 years of operation. Will they teach that to the kids? The program is funded from an energy conservation fee on customer electricity bills.
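A related way to frame the Florida numbers is to ask how much of each system's cost is recovered before the panels need replacing after 25 years. The sketch below assumes the cost and savings ranges pair up by system size (the $50,000 system saving $600 a year, the $80,000 system saving $1,000); that pairing is an assumption, not something the program states. On those inputs the simple paybacks come out at about 80 to 83 years, and only about 30% of the cost is recovered within the panel life.

```python
# Florida "Solar for Schools" ranges from the article, paired by system size.
# Assumption: the $50,000 system yields the $600/year savings and the
# $80,000 system the $1,000/year savings.
PANEL_LIFE_YEARS = 25  # replacement interval stated in the article


def lifetime_recovery(cost: float, annual_savings: float, life_years: int) -> float:
    """Fraction of the up-front cost recovered before the panels need replacing."""
    return annual_savings * life_years / cost


systems = {
    "smaller system": (50_000, 600),   # (cost in $, savings in $/year)
    "larger system": (80_000, 1_000),
}

for name, (cost, savings) in systems.items():
    payback = cost / savings
    recovered = lifetime_recovery(cost, savings, PANEL_LIFE_YEARS)
    print(f"{name}: simple payback {payback:.0f} years; "
          f"{recovered:.0%} of cost recovered in {PANEL_LIFE_YEARS} years")
```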

Solar energy is dilute. When the sun is directly overhead on a clear day, about 1,000 watts of sunshine reaches each square meter of Earth’s surface at the equator after absorption and scattering by the atmosphere. For the southern US, this is reduced to about 800 watts per square meter, since the angle of the sunlight is not quite perpendicular. Solar cells convert about 15 percent of the energy to electricity, meaning that only a single 100-watt bulb can be powered from every card-table-sized surface area of a solar panel, and only at noon on a clear day.
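The card-table comparison is easy to check with back-of-envelope arithmetic. The sketch below takes the 800 W per square meter and 15 percent efficiency figures from the text and assumes a 36-inch (0.9144 m) square card table; the table size is an assumption, not something the author specifies. It gives an area of about 0.84 square meters and a peak output of about 100 W.

```python
# Back-of-envelope check of the "one 100 W bulb per card table" figure.
IRRADIANCE_W_PER_M2 = 800   # southern US, clear-sky noon (from the text)
PANEL_EFFICIENCY = 0.15     # from the text
CARD_TABLE_SIDE_M = 0.9144  # assumption: a 36-inch square card table

card_table_area_m2 = CARD_TABLE_SIDE_M ** 2
electric_watts = IRRADIANCE_W_PER_M2 * PANEL_EFFICIENCY * card_table_area_m2

print(f"Card table area: {card_table_area_m2:.2f} m^2")
print(f"Peak electrical output: {electric_watts:.0f} W")
```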

Los Angeles Community College (LACC) adopted solar energy in a big way. One of seven LACC solar systems is the Northwest Parking Lot Solar Farm, installed in 2008. The farm was purchased at a price of $10 million to produce about one megawatt of rated power, a price more than five times the cost of a commercial wind turbine farm on a per-megawatt basis. LACC spent a whopping $33 million to reduce electricity bills by only $600,000 per year. The total cost, including government subsidies, was $44 million to California taxpayers.
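The LACC figures can be run through the same simple arithmetic. The sketch below uses only the numbers in the paragraph above; the variable names are illustrative. Note that the per-MW figure is per megawatt of rated capacity, not average output, and the "five times" comparison only implies an upper bound on the wind-farm cost.

```python
# LACC figures quoted in the article.
farm_cost_usd = 10_000_000
farm_rated_mw = 1.0
program_cost_usd = 33_000_000
annual_bill_savings_usd = 600_000

cost_per_mw = farm_cost_usd / farm_rated_mw
# "More than five times" the wind-farm cost implies wind below this per-MW figure.
implied_wind_ceiling_per_mw = cost_per_mw / 5
payback_years = program_cost_usd / annual_bill_savings_usd

print(f"Solar farm: ${cost_per_mw:,.0f} per MW of rated capacity")
print(f"Implied wind-farm cost: under ${implied_wind_ceiling_per_mw:,.0f} per MW")
print(f"Simple payback on $33 million: {payback_years:.0f} years")
```

On these inputs, the $33 million outlay takes about 55 years to recover from bill savings alone.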

Solar energy has excellent uses, such as powering call boxes along highways, or swimming pool heating. But it’s trivial in our overall energy picture. Despite 20 years, billions in state and federal subsidies, and warm, happy solar school programs, solar provided only 1.1 percent of US electricity and only 0.2 percent of US energy in 2012.

Suppose our schools get back to the study of physics and economics and drop the “solar will save the planet” ideology?

Steve Goreham is Executive Director of the Climate Science Coalition of America and author of the book The Mad, Mad, Mad World of Climatism: Mankind and Climate Change Mania.

Oct 28, 2013

European Economic Stability Threatened By Renewable Energy Subsidies

By James Conca

ICECAP NOTE: See more stories about the health hazards, other dangers, and the greed and politicization surrounding renewables in this newsletter.

The stability of Europe’s electricity generation is at risk from the warped market structure caused by skyrocketing renewable energy subsidies that have swarmed across the continent over the last decade.

This sentiment was echoed a week ago by the CEOs of Europe’s largest energy companies, who produce almost half of Europe’s electricity. This group joined voices calling for an end to subsidies for wind and solar power, saying the subsidies have led to unacceptably high utility bills for residences and businesses, and even risk causing continent-wide blackouts (Geraldine Amiel WSJ).

The group includes Germany’s E.ON AG, France’s GDF Suez SA and Italy’s Eni SpA, and they unanimously pointed the finger at European governments’ poorly thought out decision at the turn of the millennium to promote renewable energy by any means.

The plan seemed like a good one in the late 1990s as a way to reverse Europe’s reliance on imported fossil fuels, particularly from Russia and the Middle East. But it seems the execution hasn’t matched the good intentions, and the authors of the legislation didn’t understand the markets.

“The importance of renewables has become a threat to the continent’s supply safety,” warned senior global energy analyst Colette Lewiner, referring to a recent report by the European consulting firm Capgemini.

“We’ve failed on all accounts: Europe is threatened by a blackout like in New York a few years ago, prices are shooting up higher, and our carbon emissions keep increasing,” said GDF Suez CEO Gerard Mestrallet ahead of the news conference.

Under these subsidy programs, wind and solar power producers get priority access to the grid and are guaranteed high prices. In France, nuclear power wholesales for about €40/MWh ($54/MWh), but electricity generated from wind turbines is guaranteed at €83/MWh ($112/MWh), regardless of demand. Customers have to pick up the difference.
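To put a number on "the difference", here is a minimal sketch using the French prices quoted above, treating the nuclear wholesale price as the baseline as the article does. The exchange rate of 1.35 dollars per euro is an assumption, chosen because it reproduces the article's own dollar conversions; the 1 GWh of wind output is purely illustrative.

```python
# Per-MWh premium for wind power under the French guaranteed price,
# using the figures quoted in the article.
NUCLEAR_WHOLESALE_EUR_PER_MWH = 40
WIND_GUARANTEED_EUR_PER_MWH = 83
USD_PER_EUR = 1.35  # assumption: rate consistent with the article's $54 and $112


def premium_eur(wind_mwh: float) -> float:
    """Extra cost, in euros, of buying wind output at the guaranteed price."""
    return wind_mwh * (WIND_GUARANTEED_EUR_PER_MWH - NUCLEAR_WHOLESALE_EUR_PER_MWH)


hypothetical_wind_output_mwh = 1_000  # illustrative: 1 GWh
extra = premium_eur(hypothetical_wind_output_mwh)
print(f"Premium per MWh: EUR {WIND_GUARANTEED_EUR_PER_MWH - NUCLEAR_WHOLESALE_EUR_PER_MWH}")
print(f"Extra cost for 1 GWh: EUR {extra:,.0f} (about ${extra * USD_PER_EUR:,.0f})")
```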

The subsidies enticed enough investors into wind and solar that Germany now has almost 60,000 MW of wind and solar capacity, or about 25% of that nation’s total capacity. Sounds good for the planet.

The problems began when the global economic meltdown occurred in 2008. Demand for electricity fell throughout Europe, as it did in America, which deflated wholesale electricity prices. However, investors kept plowing money into new wind and solar power because of the guaranteed prices for renewable energy.

Meanwhile, electricity prices have been rising in Europe since 2008, just under 20% for households and just over 20% for businesses, according to Eurostat.

Since renewable capacity kept rising and its output had to be taken by the grid, utilities across Europe began closing fossil-fuel power plants that the subsidies had made less profitable, including over 50 GW of gas-fired plants, Mr. Mestrallet said.

I’m a little confused, isn’t gas supposed to be the savior along with renewables? You can’t have a lot of renewables without back-up gas to buffer the intermittency of renewables since gas is the only one you can turn on and off like a light switch.

I understand that Germany is building new coal plants that can ramp up and down faster than ever before, but the replacement of so much gas with renewables means Europe may not be able to respond to dramatic weather effects, like an unusually cold winter when wind and solar can’t produce much.

Exhaust plumes rise from the new Neurath lignite coal-fired power station at Grevenbroich near Aachen, western Germany. RWE, one of Germany’s major energy providers, invested in new coal-fired power plants that will buffer wind energy as well as replace reliable base-load nuclear. The wisdom of this decision remains to be seen. (Image credit: AFP/Getty Images via daylife)

In a warped parody of free-market economics, some countries are building gas-fired plants along their borders to fill this void in rapid-ramping capacity, and that scares the markets even more: gas is so expensive in Europe that the price of electricity will climb even higher (EDEM/ESGM).

As the European Commission meets this week to discuss the issue, a parallel threat looms in America as a result of a similarly well-intentioned maze of mandates and subsidies over the last decade. It has been kept at bay only by our much larger energy production and our newly abundant cheap natural gas.

Americans may not be aware that natural gas is not cheap in Europe like it is in America. America’s gas boom has occurred in the absence of natural gas liquefaction infrastructure, which is needed to import or export natural gas on world markets. Thus, the more expensive global prices do not affect the price in the U.S.

Yet.

But that will change. We’re building LNG infrastructure at an amazing pace to exploit the huge gas reserves laid bare by advances in fracking technologies. Within five years, the U.S. will be the major player in the world gas market. Of course, gas prices will double or triple in the U.S. because, like oil, the price will now be set by the global market, not by the U.S. market. And like oil, it doesn’t matter how much you produce in your own country, you pay the global price. Period. Just ask Norway.

So when natural gas prices double, what happens to the price of electricity since gas is so intimately married to renewables? State mandates and renewable production tax credits will still require us to buy renewable energy, even if it’s double the price. We’ve already seen this occur here in the Pacific Northwest in the battle between expensive wind and inexpensive hydro (Hydro Takes A Dive For Wind). Hydro lost.

That’s fine when gas is cheap. It won’t be fine when gas is expensive.

See also this report.

Oct 02, 2013

U.S. justices to hear challenge to Obama on climate change

By Anthony Watts

Tue, Oct 15 11:06 AM EDT

By Lawrence Hurley

WASHINGTON (Reuters) - In a blow to the Obama administration, the Supreme Court on Tuesday agreed to hear a challenge to part of the U.S. Environmental Protection Agency’s first wave of regulations aimed at tackling climate change.

By agreeing to hear a single question of the many presented by nine different petitioners, the court set up its biggest environmental dispute since 2007.

That question is whether the EPA correctly determined that its decision to regulate greenhouse gas emissions from motor vehicles necessarily required it also to regulate emissions from stationary sources.

The EPA regulations are among President Barack Obama’s most significant measures to address climate change. The U.S. Senate in 2010 scuttled his effort to pass a federal law that would, among other things, have set a cap on greenhouse gas emissions.

--------

Mike Stopa, running for Congress, is a graduate of Suffield Academy and holds an undergraduate degree in Astronomy from Wesleyan University and a Ph.D. in Physics from the University of Maryland. Mike teaches in the Physics Department at Harvard University, where he has served as Research Physicist and Senior Computational Physicist since 2004. He specializes in computation and nanoscience. He is the director of the National Nanotechnology Infrastructure Network Computation Project and a recognized expert on nanoscale electronics and computation. He has over 75 publications and over a thousand citations to his work in the physics literature.

---------

Climate Policies Lock Chains on Developing Nations

By Steve Goreham

Originally published in The Washington Times

As part of his climate change initiative announced in June, President Obama declared, “Today I’m calling for an end of public financing for new coal plants overseas unless they deploy carbon capture technologies, or there’s no other viable way for the poorest countries to generate electricity.” Restrictions on financing will reduce the supply and increase the cost of electrical power in developing nations, prolonging global poverty.

The World Bank has followed President Obama’s lead, announcing a shift in policy in July and stating that it will provide “financial support for greenfield coal power generation projects only in rare circumstances.” The bank has provided hundreds of millions in funding for decades to coal-fired projects throughout the developing world.

Also in July, the Export-Import Bank denied financing for the proposed Thai Binh Two coal-fired power plant in Vietnam after “careful environmental review.” While 98 percent of the population of Vietnam has access to electricity, Vietnamese consume only about 1,100 kilowatt-hours per person per year, about one-twelfth of United States usage. Electricity consumption grew 34 percent in Vietnam from 2008 to 2011. The nation needs more power and international funds for coal-fired power projects. But western ideologues try to prevent Vietnam from using coal.

The President, the World Bank, and the Export-Import Bank have accepted the ideology of Climatism, the belief that mankind is causing dangerous climate change. By restricting loans to poor nations, they hope to stop the planet from warming. But what is certain is that their new policies will raise the cost of electricity in poor nations and prolong global poverty.

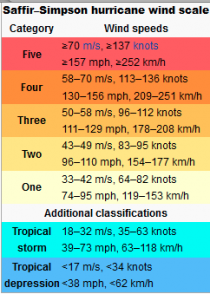

In most markets, coal is the lowest-cost fuel for producing electricity. According to the International Energy Agency (IEA), world coal and peat usage increased from 24.6 percent of the world’s primary energy supply in 1973 to 28.8 percent of supply in 2011. By comparison, electricity generated from wind and solar sources supplied less than 1 percent of global needs in 2011.

The cost of electricity from natural gas rivals that of coal in the United States, thanks to the hydrofracturing revolution. But natural gas remains a regional fuel. Natural gas prices in Europe are double those in the US and prices in Japan are triple US prices. Until the fracking revolution spreads across the world, the lowest cost fuel for electricity remains coal.

Despite our President’s endorsement, carbon capture technologies are far from a proven solution for electrical power. According to the US Department of Energy, carbon capture adds 70 percent to the cost of electricity. In addition, huge quantities of captured carbon dioxide must be transported and stored underground, adding further cost. There are no utilities currently using carbon capture on a commercial scale.

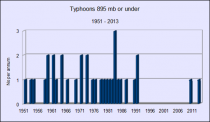

Coal usage continues to grow. Global coal consumption grew 2.5 percent from 2011 to 2012, making coal the fastest-growing hydrocarbon fuel. In 2011, coal was the primary fuel for electricity production in Poland (95%), South Africa (93%), India (86%), China (84%), Australia (72%), Germany (47%), the United States (45%), and Korea (44%). Should we now forbid coal usage in developing nations?

President Obama has stated, “...countries like China and Germany are going all in in the race for clean energy.” But China and Germany are huge coal users and usage is increasing in both nations. More than 50 percent of German electricity now comes from coal as coal fills the gap from closing nuclear plants. Today, China consumes more than 45 percent of the world’s total coal production.

ICECAP NOTE: The enviro push for renewable wind and solar has caused electricity prices to skyrocket (double) and left 300,000 homes in Germany without electricity because they can’t afford to pay the price. That was in the winter, one of the worst in a century. Are you ready for your electricity prices and gasoline to double as the Obama administration has promised? That comes on top of the doubling of your cost for health care that the demagogue party has forced on us while promising lower cost and that we could keep our doctor. All this for a failed theory that CO2 will have catastrophic effects when there has been none for 17 years as CO2 has increased 1%.

Today, more than 1.2 billion people do not have access to electricity. Hundreds of millions of others struggle with unreliable power. Power outages interrupt factory production, students walk to airports to read under the lights, and schools and hospitals lack vital electrical power.

Electricity is the foundation of a modern industrialized nation. Lack of electricity means poverty, disease, and shortened lifespans. Foolish climate policies lock chains on developing nations.

Steve Goreham is Executive Director of the Climate Science Coalition of America and author of the new book The Mad, Mad, Mad World of Climatism: Mankind and Climate Change Mania.
