Sep 02, 2010

The Poisoned Chalice

Terence Caldwell

Australians are fortunate that they can freely choose whomever they wish to represent them in Parliament and to govern this once-great country.

This choice is usually made on the assumption that the voter has studied the party of his choice and is WELL AWARE of its policies and what it stands for. But I find it hard to believe that the people who voted for the Greens are aware of all their policies.

So why did you vote for the Greens?

Was it, as one voter said, ‘I thought they were nice people’? Another said it was ‘because they care about the environment.’ As if no one else does!

Perhaps you were disenchanted with the major parties and thought you would give your vote to the Greens instead, without even knowing their policies or how dangerous they are. I find it impossible to believe that anyone in their right mind would vote for the Greens if they knew the truth behind all the lies they have told, and about policies that would put the fear into the devil.

Did you know they want to introduce death duties? That horrible, archaic tax from the past has caused an overwhelming amount of misery and grief to so many families, rich and poor alike. Everything is frozen until the tax man gets his share. In the meantime, families are torn apart.

They want to allow boat people and other so-called ‘environmental refugees’ free access to Australia without any limitations, or health and security checks, within two weeks of arrival, allowing them to live freely in our country and, worse, giving them financial aid and housing.

The flood of millions from Asia and everywhere else would totally devastate our country, and terrorists would be free to attack us from within, tearing us apart from the inside.

They want to increase taxes on large and small businesses by another 5%, as if small businesses were not already hanging on by their fingertips, desperately trying to survive a global financial crisis and the painfully slow recovery. This could be the death blow for many small businesses.

They will demand that farmers “remove as far as possible” all genetically modified crops, which include cotton worth $1.3 billion a year.

They want to end “the mining and export of uranium”, worth $900 million a year.

They want to introduce a carbon tax on everything, destroying whatever businesses survive their other holocausts and forcing the cost of electricity (if we still have any) and other products through the roof.

They intend to lift foreign aid to a minimum of 0.7 per cent of GDP, which means an instant rise of $4 billion a year in handouts to their fanatics overseas. This is partly because their Copenhagen handout of seven thousand million dollars a year thankfully failed, and this is another way of getting that money.

They want to close down all coal mines in Australia and wipe out an industry that brings the Australian people over $64 billion a year to help pay for our hospitals, roads, schools, family and child care, pensions, national defence, etc.

Not only that, but it would completely sour our trade relationship with China, the backbone of our export economy. Without China purchasing our resources, our economy would be in total collapse, and so would this country.

They want to close down all coal-fired power stations and replace them with “renewable energy”, which might be O.K. if it were at all feasible, but it is TOTALLY IMPOSSIBLE to achieve even 5% renewable energy, let alone 100%.

It has been tried over the last thirty years or more in many countries, with abject failure as the result.

California has gone bankrupt trying, with over 14,000 wind generator failures and a return to thermal power generation. After 31 years, the total share of ‘renewable energy’ in California is 2.3% and falling.

Spain is also bankrupt because of its renewable energy fiasco and is importing power, the cost of which has doubled, and it now has an unemployment rate of over 20%.

Germany has over 7,500 wind generators, of which 2,500 failed last year, and it has returned to thermal power, which saved it from total collapse last winter.

Denmark has 5,000 wind generators, of which 2,000 failed last year; it has yet to reduce so-called ‘CO2 emissions’ by one gram and, like the other countries, relies on thermal power generation for backup.

The results are always the same, failure after failure in country after country, but the Greens flatly refuse to see it because they want to bring Australia to its knees and under their communist globalisation control.

They have NO interest in our well-being, only in their communist ideologies, and they are determined to destroy our economy.

Under that very thin cover of green is the very bright red of communism, led by the fanatical Bob Brown, who is a liar and one of the worst perpetrators of this world scam, and who wants total control.

If the extremists called ‘Greens’ bring their policies to fruition, it will destroy our economy and our country, to the point that it could bring about an extremely violent reaction from the Australian people when they finally realise that the Greens are deliberately trying to tear our country apart.

By that time it could be too late. The only consolation would be that every Green would pay severely for their treachery.

Now that you have read their policies (and these are not all of them), are you still proud you voted for the Greens? Will you stand up and accept the blame when this country is devastated by this communist agenda?

Or will you do the right thing by your country and help get rid of these destructive vandals and traitors?

When you voted for the Greens, you were handed a poisoned chalice that we may never recover from. Remember that 173 people were burnt to death because of ‘green’ policies forced on councils that were too weak to refuse them.

Remember, too, the destruction of over 300 homes and the displacement of over 7,000 people. The Greens have NOT been responsible for even one environmental improvement. They are a farce and liars.

If Australia could reduce its total CO2 emissions (a harmless gas essential to all life) by 20%, as the Greens want, it would make a difference of 0.00000016%, or 16 parts per ten millionth, to the increase of CO2 in the air per year. That is NOT even measurable.

Following are two similar reports. I urge you to read them and realise this is not some ‘wild story’. Our future is now in your hands. You are the ones who MUST REJECT the Greens and do everything you can to revoke your vote and stop the communist Greens before it is too late.

Sep 01, 2010

Covering up for George Soros

By Ed Lasky

The sinister, omnipresent moneybags of the American left, George Soros, knows that distraction and misdirection make for a good defense. So do his many lackeys and sympathizers in the American media.

Recently, the left has built up two conservative billionaire brothers as their latest bogeymen. I am referring to the libertarians Charles and David Koch, who fund, among other groups, Americans for Prosperity. First Barack Obama lambasted them, and his minions in the media dutifully followed. Jane Mayer’s 10,000-word article in the New Yorker, titled “Covert Operations: the billionaire brothers who are waging a war against Obama,” has been widely cited in other liberal media.

In reality, the brothers were funding a variety of causes years before anyone had ever heard of Obama. Regardless, others criticized Mayer’s article as shameful, including Mark Hemingway in a trenchant piece in the Washington Examiner. One of Hemingway’s points was right on target: Mayer’s barely visible coverage of George Soros, sugar daddy of the Democratic Party and an early, ardent and generous supporter of Barack Obama. Hemingway excerpts a paragraph from Mayer’s article and notes some omissions:

But this passage from Mayer’s piece is also worth noting, as a measure of the article’s bias:

Of course, Democrats give money, too. Their most prominent donor, the financier George Soros, runs a foundation, the Open Society Institute, that has spent as much as a hundred million dollars a year in America. Soros has also made generous private contributions to various Democratic campaigns, including Obama’s. But Michael Vachon, his spokesman, argued that Soros’s giving is transparent, and that “none of his contributions are in the service of his own economic interests.”

The idea that Soros’ giving is transparent is laughable—he’s given millions to the Tides Foundation, a byzantine organization notorious for obscuring funding sources on the left ... Further, Soros was very influential in setting up the Center for American Progress think tank and many other liberal organizations in the last decade. If any billionaire has waged war against a president recently, it’s Soros in his campaign against Bush. To dismiss any concerns about Soros’ political spending while saying that the Koch brothers are at the center of a dark conspiracy is absurd.

Mayer just let the claim that Soros has no monetary interest when he gives money stand unchallenged—and that was shameful. Where was the famed New Yorker fact-checking department? Did they get laid off?

Let me expand on Hemingway’s commentary, in light of the view that somehow Soros’s giving has zero to do with his financial interest—or, as his spokesman spins it, “none of his contributions are in the service of his own economic interests.” Frank Rich, the New York Times theater critic turned frothing attack-dog columnist of the left, has started promoting this theme: Soros is Santa Claus or Mother Teresa. This Sunday, Rich had his typical invective-filled column—also railing against the Kochs—and then gave us this whopper:

Soros is a publicity hound who is transparent about where he shovels his money and “like many liberals—selflessly or foolishly, depending on your point of view—he supports causes that are unrelated to his business interests.”

What planet do Mayer and Rich live on? Soros obviously has his financial interests in mind when he gives, and he knows how to use his billions to make more billions by tapping his friends in high places in the Democratic Party.

For example, Soros has made a boatload of money off his huge investment in the Brazilian oil company Petrobras, a company that has benefited mightily from its deep offshore oil reserves. Barack Obama had the U.S. Export-Import Bank extend billions of dollars of loans to underwrite Petrobras’s offshore oil development. Soros positioned himself to reap big gains just days before his pal in the White House pushed for billions in loans to Petrobras—a company from a country that can certainly tap the financial markets on its own to raise funds to develop the oil off its shores. The company did not need easy money from American taxpayers. Yet there was Soros, who somehow was prescient enough to roll the loaded dice by taking a major stake in Petrobras. He got a double-dip type of return when Barack ("never let a crisis go to waste") Obama shut down deep-water oil exploration off America’s own energy-rich coasts—further enriching the prospects for Petrobras and George Soros.

Strike one.

Soros’s pet think tank, the Center for American Progress, constantly pushes green schemes. Democratic politicians are on board as well. This group includes Barack Obama, who runs after one electric battery, solar power plant, and windmill after another (when he is not on the links or listening to live music at the club he created in the East Room of the White House). How generous have Obama and the Democrats been to the green schemers? The grand champion of budget-busting departments has been the “Energy Efficiency and Renewable Program,” which received $1.7 billion in 2008 and $16.8 billion in 2009, a 1,014% increase in just one year. Media reports over the past year or so have tied numerous Democratic donors to these “ventures.” They have been richly rewarded with taxpayer dollars.

What a great scheme! Give thousands to Obama and various Democrats and get billions back in our taxpayer dollars. Who is a big investor in “clean energy,” by the way? Why, none other than George Soros, who announced back in October 2009 that he would invest at least $1 billion in “clean energy.” The Center for American Progress is closely tied to the Obama administration (see “Soros-Funded Democratic Idea Factory Becomes Obama Policy Font”) and serves as its hiring hall, not to mention as the fourth or fifth branch of government (or so it seems—I have the Center for American Progress as a Google search term, and the employees of that think tank are all over the media landscape, as well as D.C.). Soros knows how to use leverage, and the millions he put into the Center for American Progress (and into the election of Barack Obama and other leftist Democrats) will reap big returns—at our expense—in the years ahead.

Strike two.

Whatever happened to all the hullaballoo regarding hedge funds? Back in 2008 and early 2009, Democrats were busy blaming Wall Street, hedge funds, and Republicans for the financial crisis. We were promised that hedge funds would be regulated to the point of harmlessness, that their investors would have to be disclosed, their positions monitored, their leverage controlled. What happened to those promises? Well, that did not suit hedge fund managers—not at all. So the promises went away.

And who was one of the biggest hedge fund titans out there? Why, it happens to be none other than George Soros, who made billions in 2008 from the financial and housing collapse and then made billions more in 2009 as the Democrats bailed out Wall Street. Who was the major beneficiary of hedge fund campaign money in 2008? Barack Obama, eclipsing the long-time champ in this area, Christopher Dodd, the Senator from Connecticut (Hedgefundland) who chaired the Senate Banking Committee. In 2008, I noted that Obama was “The Hedge Fund Candidate.”

Obama, Dodd, and fellow Democrats just forgot that crusade against hedge funds, and Soros continues to rake in billions. George Soros—who is the number-one funder of so-called 527 groups (such as MoveOn.org)—gets his money’s worth whenever and wherever he puts it to work. He finds his best leverage in the Democratic Party.

Strike three.

And a bonus pitch.

I have written quite a bit about the riches we have in America in the form of shale gas. Soros has investments in the energy industry that would be harmed if our cheap and plentiful reserves were tapped to their full extent. Among his holdings is a huge stake in InterOil, which has big reserves of natural gas in New Guinea. Democrats are now trying to shut down our shale gas industry by attacking “fracking”—a method used to extract the gas from the shale rock that holds it. There is plenty of evidence that fracking is safe and sound—it has been used for many years. Nevertheless, the industry is under attack by Democrats in Congress such as Ed Markey, by Obama’s EPA, by the Center for American Progress, by ProPublica—an outfit created and funded by Soros pals Herbert and Marion Sandler—and recently by MoveOn.org. Soros must be getting desperate, as Americans crave cheap natural gas; why else bring in MoveOn.org, which has heretofore focused on the purely political sport of bashing Republicans and electing as many left-wingers as it could—including, of course, the biggest of them all, Barack Obama.

One could go on. Soros is an enterprising man and legendary investor. He figured out sooner and better than anyone else how to buy political power and bend politicians to his will. He is not a goody-two-shoes, as partisans on the left try to portray him. He has benefited hugely from leverage, and the best leverage he enjoys is when he “gives” money in ways that are really investments (payoffs, bribes?) in disguise. Shame on Mayer, Rich, and others who hide this history. They also are all but puppets in the hands of George Soros.

Ed Lasky is news editor of American Thinker

Sep 01, 2010

Johnson’s stance is the correct one

Letter to the Milwaukee Journal Sentinel Online

As scientists, we write to support U.S. Senate candidate Ron Johnson’s correct view on natural factors of climate change as reported in the Journal Sentinel (Page 1B, Aug. 17; Page 1A, Aug. 21).

This is not a debate about politics or about a belief system. Objective science informs us that the so-called consensus viewpoint offered by the United Nations Intergovernmental Panel on Climate Change, that man-made carbon dioxide is the dominant factor in climate change, is largely a political conclusion and not likely a scientifically correct one.

If global temperatures are supposedly affected by rapidly rising atmospheric CO2 concentrations but the warming has ceased over at least the last decade, then something more important than atmospheric CO2 must be driving climate change. This is why the National Oceanic and Atmospheric Administration reported Aug. 13 that “greenhouse gas forcing fails to explain the 2010 heat wave over western Russia.”

We wish to emphasize that many peer-reviewed publications exist that support the minimal role of atmospheric CO2 as a cause for the weather and climate that we experience. Extremist views only serve to induce panic about climate change, and the unwillingness to address the real science only leads to spurious claims that “the science is settled.”

For example, a paper published Aug. 19 in Geophysical Research Letters by a scientist from the California Institute of Technology shows that even the apparently drastic decrease of summer-autumn Arctic sea ice is not unprecedented but merely an effect of Arctic Ocean geography.

We therefore applaud Senate candidate Ron Johnson’s stance against CO2-induced climate change.

Eight scientists signed this letter; they noted that their university affiliations are for identification purposes only and are not an endorsement of the letter. The signers are: Willie Soon, Harvard-Smithsonian Center for Astrophysics; Scott Armstrong, Wharton School at University of Pennsylvania; Robert Carter, James Cook University, Australia; Susan Crockford, University of Victoria, Canada; Kesten Green, University of South Australia; Nils-Axel Morner, Stockholm University, Sweden; George Taylor, Oregon State University, retired; and Mitchell Taylor, Lakehead University, Canada.

Aug 31, 2010

How Harvard’s Code of Ethics Differs from UVA and Penn State with respect to Michael Mann

By Charles Battig, MD

An insight is now available into the apparent difference between how “scientific misconduct” is handled at Harvard University and how it has been handled at Penn State and the University of Virginia in the matter of climatologist Michael Mann.

Harvard professor of psychology Marc Hauser was found “solely responsible for eight instances of scientific misconduct” involving the “data acquisition, data analysis, data retention, and the reporting of research methodologies and results,” according to the August 20, 2010 statement by Harvard dean Michael D. Smith. This finding was based on a study by a faculty investigating committee. The report noted that it began with an “inquiry phase” in response to “allegations of scientific misconduct.” It seems that there were allegations of “monkey business” in his research on monkey cognition. Three papers by Hauser, presumably peer reviewed, will need to be corrected or retracted, according to Dean Smith. The academic fate of the professor is yet to be decided.

In contrast, the two reviews of the behavior of climatologist Michael Mann at Penn State seemed primarily focused on his data housekeeping habits and openness to sharing his data and analysis methodology. He was found to have acted within the “accepted practices within the scientific community for proposing, conducting, or reporting research.” The issues of data acquisition and analysis validity were not pursued; the number of awards and publications Mann received was cited as evidence of the validity of his work.

At the University of Virginia, an “inquiry phase”, such as noted in the Harvard protocol, was initiated by Virginia Attorney General Ken Cuccinelli into the possible misuse of public funds by Mann in his pursuit of employment by the University and his use of such funds in his research activities there. Virginia state law gives the AG wide discretion in initiating investigations into suspected misuse of state funds. The university and its various supporters met this request with claims that it impinged on sacred academic freedom and chilled the environment for academic research in general. Rather than welcome the chance to dispel the suspicion of scientific misconduct and protect its academic reputation, the university enlisted a high-powered D.C. legal team to fight the AG’s request in court.

While this legal process plays out, the court of public opinion must wonder why the openness and direct dealing with such allegations exhibited by Harvard is not the model for the University of Virginia. Harvard is shown to be a scientifically open and self-policing university; UVa is hiding behind its self-righteous claims of academic freedom and legal barricades. Whose research will the public more likely trust?

Charles Battig, M.D.

President, Piedmont Chapter

Virginia Scientists and Engineers for Energy and Environment

Charlottesville, VA

Aug 31, 2010

11 Year Cycle in Hot Summers

By Joseph S. D’Aleo, CCM

We have seen hot summers in 1933, 1944, 1955, 1966, 1977, 1988, 1999, 2010. Notice a pattern? The years are 11 years apart.

This 11-year cycle may be a coincidence, but if so, it is a 1 in 256 chance one. In some years the heat was concentrated in one month (in 1966 it was July); in others it lasted throughout the summer.
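The post does not say how the 1 in 256 figure was derived. One reading, which is our assumption and not the author's stated method, is that each of the eight listed summers is treated as an independent coin flip with a 50% chance of being hot, giving odds of (1/2)^8 = 1/256. The short Python sketch below checks the 11-year spacing of the listed years and computes that probability under the stated assumption; `HOT_SUMMERS`, `gaps` and `chance_all_hot` are hypothetical names introduced only for illustration.

```python
from fractions import Fraction

# Hot summers listed in the post.
HOT_SUMMERS = [1933, 1944, 1955, 1966, 1977, 1988, 1999, 2010]


def gaps(years):
    """Return the spacing in years between consecutive entries."""
    return [later - earlier for earlier, later in zip(years, years[1:])]


def chance_all_hot(n_years, p_hot=Fraction(1, 2)):
    """Probability that n specific summers are all hot, ASSUMING
    (hypothetically) each summer is independently hot with probability p_hot."""
    return p_hot ** n_years


if __name__ == "__main__":
    print(gaps(HOT_SUMMERS))                 # [11, 11, 11, 11, 11, 11, 11]
    print(chance_all_hot(len(HOT_SUMMERS)))  # 1/256
```

Using `Fraction` keeps the result exact, so it prints as `1/256` rather than a rounded decimal. Changing `p_hot` shows how sensitive the figure is to the assumed base rate of hot summers.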

What else has an 11-year cycle? The sun, of course. Solar cycles average 11 years. When a new solar cycle begins, its spots appear at higher solar latitudes and gradually move equatorward. During transitions you typically have old-cycle spots near the equator and new-cycle spots at higher latitudes.

All the years above fell during these transitions. A coincidence? We’ll leave it to our solar expert readers to speculate on whether this is solar driven and on possible mechanisms. Other common elements in some of the years include an El Nino winter giving way to a La Nina summer, and a strong rebound from a very negative winter Arctic Oscillation (AO) pattern.

See the so-called butterfly diagram, which shows the positions of sunspots by year.

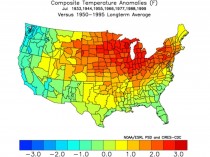

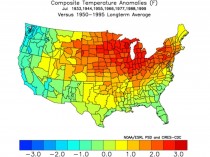

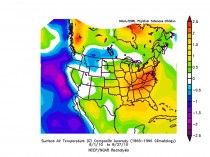

Compositing those years gives you this warm summer signal.


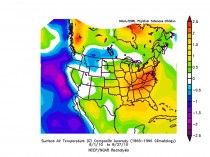

The actual anomalies through August 30 showed the warmth further south. This may be because of a continuation of strong high latitude blocking (negative NAO) as evidenced by the warmth in northeast Canada.


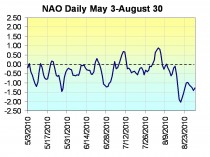

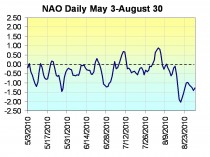

Indeed, a plot of the daily NAO obtained from NOAA CPC shows a predominantly negative NAO. This forces everything else further south in North America and the Atlantic.


In July, a negative NAO means a hot southeast. By winter, it means cold.

A continuation of this blocking may make the upcoming La Nina winter more interesting. Such winters tend to be cold in the west and north and warmer in the southeast.
