Complexity of thousands of weather variables bedevils policy

See Tony Heller’s killer video here.

BOULDER, Colo. - For almost five years, an international consortium of scientists was chasing clouds, determined to solve a problem that bedeviled climate-change forecasts for a generation: How do these wisps of water vapor affect global warming?

They reworked 2.1 million lines of supercomputer code used to explore the future of climate change, adding more-intricate equations for clouds and hundreds of other improvements. They tested the equations, debugged them and tested again.

The scientists would find that even the best tools at hand can’t model climates with the sureness the world needs as rising temperatures impact almost every region.

When they ran the updated simulation in 2018, the conclusion jolted them: Earth’s atmosphere was much more sensitive to greenhouse gases than decades of previous models had predicted, and future temperatures could be much higher than feared - perhaps even beyond hope of practical remedy.

“We thought this was really strange,” said Gokhan Danabasoglu, chief scientist for the climate-model project at the Mesa Laboratory in Boulder at the National Center for Atmospheric Research, or NCAR. “If that number was correct, that was really bad news.”

At least 20 older climate models disagreed with the new one at NCAR, an open-source model called the Community Earth System Model 2, or CESM2, funded mainly by the U.S. National Science Foundation and arguably the world’s most influential. Then, one by one, a dozen climate-modeling groups around the world produced similar forecasts.

The scientists soon concluded their new calculations had been thrown off kilter by the physics of clouds in a warming world, which may amplify or damp climate change. “The old way is just wrong, we know that,” said Andrew Gettelman, a physicist at NCAR who specializes in clouds and helped develop the CESM2 model. “I think our higher sensitivity is wrong too. It’s probably a consequence of other things we did by making clouds better and more realistic. You solve one problem and create another.”

Since then the CESM2 scientists have been reworking their algorithms using a deluge of new information about the effects of rising temperatures to better understand the physics at work. They have abandoned their most extreme calculations of climate sensitivity, but their more recent projections of future global warming are still dire - and still in flux.

As world leaders consider how to limit greenhouse gases, they depend on what computer climate models predict. But as algorithms and the computers they run on become more powerful - able to crunch far more data and do better simulations - that very complexity has left climate scientists grappling with mismatches among competing computer models.

While vital to calculating ways to survive a warming world, climate models are hitting a wall. They are running up against the complexity of the physics involved; the limits of scientific computing; uncertainties around the nuances of climate behavior; and the challenge of keeping pace with rising levels of carbon dioxide, methane and other greenhouse gases. Despite significant improvements, the new models are still too imprecise to be taken at face value, which means climate-change projections still require judgment calls.

“We have a situation where the models are behaving strangely,” said Gavin Schmidt, director of the National Aeronautics and Space Administration’s Goddard Institute for Space Studies, a leading center for climate modeling. “We have a conundrum.”

Policy tools

The United Nations Intergovernmental Panel on Climate Change collates the latest climate data drawn from thousands of scientific papers and dozens of climate models, including the CESM2 model, to set an international standard for evaluating the impacts of climate change. That provides policy makers in 195 countries with the most up-to-date scientific consensus related to global warming. Its next major advisory report, which will serve as a basis for international negotiations, is expected this year.

For climate modelers, the difference in projections amounts to a few degrees of average temperature change in response to levels of carbon dioxide added to the atmosphere in years ahead. A few degrees will be more than enough, most scientists say, to worsen storms, intensify rainfall, boost sea-level rise - and cause more-extreme heat waves, droughts and other temperature-related consequences such as crop failures and the spread of infectious diseases.

When world leaders in 1992 met in Rio de Janeiro to negotiate the first comprehensive global climate treaty, there were only four rudimentary models that could generate global-warming projections for treaty negotiators.

In November 2021, as leaders met in Glasgow to negotiate limits on greenhouse gases under the auspices of the 2015 Paris Accords, there were more than 100 major global climate-change models produced by 49 different research groups, reflecting an influx of people into the field. During the treaty meeting, U.N. experts presented climate-model projections of future global-warming scenarios, including data from the CESM2 model. “We’ve made these models into a tool to indicate what could happen to the world,” said Gerald Meehl, a senior scientist at the NCAR Mesa Laboratory. “This is information that policy makers can’t get any other way.”

The Royal Swedish Academy of Sciences in October awarded the Nobel Prize in Physics to scientists whose work laid the foundation for computer simulations of global climate change.

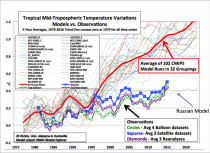

Skeptics have scoffed at climate models for decades, saying they overstate hazards. But a growing body of research shows many climate models have been uncannily accurate. For one recent study, scientists at NASA, the Breakthrough Institute in Berkeley, Calif., and the Massachusetts Institute of Technology evaluated 17 models used between 1970 and 2007 and found most predicted climate shifts were “indistinguishable from what actually occurred.”

Still, models remain prone to technical glitches and are hampered by an incomplete understanding of the variables that control how our planet responds to heat-trapping gases.

In its guidance to governments last year, the U.N. climate-change panel for the first time played down the most extreme forecasts. Before making new climate predictions for policy makers, an independent group of scientists used a technique called “hind-casting,” testing how well the models reproduced changes that occurred during the 20th century and earlier. Only models that re-created past climate behavior accurately were deemed acceptable.
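The screening idea behind hind-casting can be illustrated with a toy sketch. It is not the procedure the panel's scientists used: the error measure, the tolerance, the model names and every number below are hypothetical placeholders chosen only to show the logic of keeping models that track a known past record.

```python
from statistics import mean


def hindcast_error(simulated, observed):
    """Mean absolute difference between a model's simulated past and the record."""
    if len(simulated) != len(observed):
        raise ValueError("simulated and observed series must have the same length")
    return mean(abs(s - o) for s, o in zip(simulated, observed))


def screen_models(runs, observed, tolerance):
    """Return the names of models whose hindcast error is within the tolerance."""
    return [
        name
        for name, simulated in runs.items()
        if hindcast_error(simulated, observed) <= tolerance
    ]


# Placeholder values only: NOT real temperature records or real model output.
observed = [0.0, 0.2, 0.4, 0.6]
runs = {
    "model_a": [0.0, 0.25, 0.35, 0.65],  # stays close to the record
    "model_b": [0.0, 0.5, 1.0, 1.5],     # warms far faster than the record
}

accepted = screen_models(runs, observed, tolerance=0.1)
print(accepted)
```

With these made-up inputs only the model that stays close to the record passes the screen. Real evaluations compare many variables over long periods, not a single short series.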

In the process, the NCAR consortium scientists checked whether the advanced models could reproduce the climate during the last Ice Age, 21,000 years ago, when carbon-dioxide levels and temperatures were lower than today. CESM2 and other new models projected temperatures much colder than the geologic evidence indicated. University of Michigan scientists then tested the new models against the climate 50 million years ago when greenhouse-gas levels and temperatures were much higher than today. The new models projected higher temperatures than evidence suggested.

While accurate across almost all other climate factors, the new models seemed overly sensitive to changing carbon-dioxide levels and, for the past several years, scientists have been meticulously fine-tuning them to narrow the uncertainties.

Then there is the cloud conundrum. Because clouds can both reflect solar radiation into space and trap heat from Earth’s surface, they are among the biggest challenges for scientists honing climate models.

At any given time, clouds cover more than two-thirds of the planet. Their impact on climate depends on how reflective they are, how high they rise and whether it is day or night. They can accelerate warming or cool it down. They operate at a scale as broad as the ocean, as small as a hair’s width. Their behavior can be affected, studies show, by factors ranging from cosmic rays to ocean microbes, which emit sulfur particles that become the nuclei of water droplets or ice crystals.

“If you don’t get clouds right, everything is out of whack,” said Tapio Schneider, an atmospheric scientist at the California Institute of Technology and the Climate Modeling Alliance, which is developing an experimental model. “Clouds are crucially important for regulating Earth’s energy balance.”

Comparing models

Older models, which rely on simpler methods to model clouds’ effects, for decades asserted that doubling the atmosphere’s carbon dioxide over preindustrial levels would warm the world between 2.7 and 8 degrees Fahrenheit (1.5 and 4.5 degrees Celsius).

New models account for clouds’ physics in greater detail. CESM2 predicted that a doubling of carbon dioxide would cause warming of 9.5 degrees Fahrenheit (5.3 degrees Celsius) - almost a third higher than the previous version of their model, the consortium scientists said. In an independent assessment of 39 global-climate models last year, scientists found that 13 of the new models produced significantly higher estimates of the global temperatures caused by rising atmospheric levels of carbon dioxide than the older computer models - scientists called them the “wolf pack.” Weighed against historical evidence of temperature changes, those estimates were deemed unrealistic.

By adding far-more-detailed equations to simulate clouds, the scientists might have introduced small errors that could make their models less accurate than the cloud assumptions of older models, according to a study by NCAR scientists published in January 2021. Taking the uncertainties into account, the U.N.’s climate-change panel narrowed its estimate of climate sensitivity to a range between 4.5 and 7.2 degrees Fahrenheit (2.5 to 4 degrees Celsius) in its most recent report for policy makers last August.
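The paired Fahrenheit and Celsius figures quoted above are temperature changes, not thermometer readings, so they convert with the 9/5 scale factor alone and without the 32-degree offset. The short sketch below checks the article's quoted pairs; the function name and dictionary labels are illustrative assumptions, not anything taken from the models themselves.

```python
def delta_c_to_f(delta_c: float) -> float:
    """Convert a temperature *difference* in Celsius degrees to Fahrenheit degrees.

    A difference scales by 9/5 only; the +32 offset applies to absolute readings.
    """
    return delta_c * 9.0 / 5.0


# Celsius values as quoted in the article; labels are for illustration only.
quoted_celsius = {
    "older models, low end": 1.5,
    "older models, high end": 4.5,
    "CESM2 estimate": 5.3,
    "U.N. panel range, low end": 2.5,
    "U.N. panel range, high end": 4.0,
}

for label, delta_c in quoted_celsius.items():
    print(f"{label}: {delta_c} C = {delta_c_to_f(delta_c):.1f} F")
```

Rounded to one decimal place, the results agree with the Fahrenheit figures in the text, with 8.1 for the older models' high end appearing there as 8.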

Dr. Gettelman, who helped develop CESM2, and his colleagues in their initial upgrade added better ways to model polar ice caps and how carbon and nitrogen cycle through the environment. To make the ocean more realistic, they added wind-driven waves. Since releasing the open-source software in 2018, the NCAR scientists have updated the CESM2 model five times, with more improvements in development. “We are still digging,” said Jean-Francois Lamarque, director of NCAR’s climate and global dynamics laboratory, who was formerly the project’s chief scientist. “It is going to take quite a few years.”

The NCAR scientists in Boulder would like to delve more deeply into the behavior of clouds, ice sheets and aerosols, but they already are straining their five-year-old Cheyenne supercomputer, according to NCAR officials. A climate model able to capture the subtle effects of individual cloud systems, storms, regional wildfires and ocean currents at a more detailed scale would require a thousand times more computer power, they said.

“There is this balance between building in all the complexity we know and being able to run the model for hundreds of years multiple times,” said Andrew Wood, an NCAR scientist who works on the CESM2 model. “The more complex a model is, the slower it runs.”

Researchers now are under pressure to make reliable local forecasts of future climate changes so that municipal managers and regional planners can protect heavily populated locales from more extreme flooding, drought or wildfires. That means the next generation of climate models needs to link rising temperatures on a global scale to changing conditions in a local forest, watershed, grassland or agricultural zone, said Jacquelyn Shuman, a forest ecologist at NCAR who is researching how to model the impact of climate change on regional wildfires. “Computer models that contain both large-scale and small-scale models allow you to really do experiments that you can’t do in the real world,” she said. “You can really ramp up the temperature, dial down the precipitation or completely change the amount of fire or lightning strikes that an area is seeing, so you can really diagnose how it all works together. That’s the next step. It would be very computationally expensive.”

The NCAR scientists are installing a new $40 million supercomputer named Derecho, built by Hewlett Packard Enterprise and designed to run climate-change calculations at three times the speed of their current machine. Once it becomes operational this year, it is expected to rank among the world’s top 25 or so fastest supercomputers, NCAR officials said.

----------

With this in mind, may I suggest “Looking Out the Window: Are Humans Really Responsible for Changing Climate, The Trial of Carbon Dioxide in the Court of Public Opinion” by Bob Webster, available on Amazon as a book and e-book.

It is well researched and written and presents data and details that should cause believers with an open mind to question the official version of the “science”.

From the Back Cover

“The hot dry seasons of the past few years have caused rapid disintegration of glaciers in Glacier National Park, Montana...Sperry Glacier...has lost one-quarter or perhaps one-third of its ice in the past 18 years...If this rapid rate should continue...the glacier would almost disappear in another 25 years...”

“Born about 4,000 years ago, the glaciers that are the chief attraction in Glacier National Park are shrinking so rapidly that a person who visited them ten or fifteen years ago would hardly recognize them today as the same ice masses.”

Do these reports sound familiar? Typical of frequent warnings of the dire consequences to be expected from global warming, such reports often claim modern civilization’s use of fossil fuels as being the dominant cause of recent climate warming.

You might be surprised to learn the reports above were made nearly thirty years apart! The first in 1923 prior to the record heat of the Dust Bowl years during the 1930s. The second in 1952 during the second decade of a four-decade cooling trend that had some scientists concerned that a new ice age might be on the horizon!

Did the remnants of Sperry Glacier disappear during global warming of the late 20th century?

According to the US Geological Survey (USGS), today Sperry Glacier “ranks as a moderately sized glacier” in Glacier National Park.

What caused the warmer global climate prior to “4,000 years ago” before Glacier National Park’s glaciers first appeared?

Are you aware that during 2019 the National Park Service quietly began removing its “Gone by 2020” signs from Glacier National Park as its most famous glaciers continued their renewed growth that began in 2010?

Was late 20th-century global warming caused by fossil fuel emissions? Was it really more pronounced than early 20th-century warming? Or was late 20th-century warming perfectly natural, in part a response to the concurrent peak strength of one of the strongest solar grand maxima in contemporary history?

These and other questions are addressed by “Looking Out the Window.”

Be a juror in the trial of carbon dioxide in the court of public opinion and let the evidence inform your verdict.