My colleague David Wojick has written an important, perceptive article explaining how Google’s search engine algorithms so successfully exclude conservative, climate skeptic, and free market news and opinion from even specific inquiries. As David notes, during his test searches “Google never found a truly conservative (what it would call right wing) source” like Townhall or the Daily Caller. “It just doesn’t happen, and the algorithm clearly knows that, as does Google.”

For a supposed research and educational tool that commands 92.2% of all online inquiries, this is not just reprehensible. It is downright dangerous for a free, functioning, modern democratic society and world. It is especially unacceptable when that “search engine” uses a public internet system that was built by government agencies, using taxpayer dollars, for the purpose of ensuring the free flow of information and open, robust discussion of vital policy issues.

Thank you for posting David’s article, quoting from it, and forwarding it to your friends and colleagues.

Best regards, and have a wonderful Labor Day weekend,

Paul Driessen

Google discriminates against conservatives and climate skeptics

We must understand how Google does it, why it is wrong and how it hurts America

David Wojick

Several months ago, Google quietly released a 32-page white paper, “How Google Fights Disinformation.” That sounds good. The problem is that Google not only controls a whopping 92.2% of all online searches; it is also a decidedly left-wing outfit, which views things like skepticism of climate alarmism, and conservative views generally, as “disinformation.” The white paper explains how Google’s search and news algorithms operate to suppress what Google considers disinformation and wants to keep out of educational and public discussions.

The algorithms clearly favor liberal content when displaying search results. Generally speaking, they rank and present search results based on the use of so-called “authoritative sources.” The problem is, these sources are mostly “mainstream” media, which are almost entirely liberal.

Google’s algorithmic definition of “authoritative” makes liberals the voice of authority. Bigger is better, and the liberals have the most and biggest news outlets. The algorithms are very complex, but the basic idea is that the more other websites link to you, the greater your authority.

It is like saying a newspaper with more subscribers is more trustworthy than one with fewer subscribers. This actually makes no sense, but that is how it works with the news and in other domains. Popularity is not authority, but the algorithm is designed to see it that way.
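Google does not publish its current ranking formula, and the white paper describes “authoritative sources” only in general terms. As a rough illustration of the link-counting idea described above, here is a minimal sketch of the classic PageRank iteration (the algorithm published by Google’s founders, not Google’s present production system, which uses many more signals). The damping factor of 0.85 and the five-site link graph are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank power iteration.

    links maps each page to the list of pages it links to.
    Returns a dict of scores that sum to 1.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its score, split evenly, to the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # A page with no outbound links spreads its score over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical link graph: three small sites all link to "big_outlet".
links = {
    "site_a": ["big_outlet"],
    "site_b": ["big_outlet"],
    "site_c": ["big_outlet", "small_outlet"],
    "big_outlet": ["site_a"],
    "small_outlet": [],
}
ranks = pagerank(links)
for site, score in sorted(ranks.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{site:13s} {score:.3f}")
```

Nothing in this score measures whether a page is accurate; it only reflects who links to whom, which is the distinction between popularity and authority drawn above.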

This explains why the first page of search results for breaking news almost always consists of links to liberal outlets. There is absolutely no balance with conservative news sources. Given that roughly half of Americans are conservatives, Google’s liberal news bias is truly reprehensible.

In the realm of public policies affecting our energy, economy, jobs, national security, living standards and other critical issues, the suppression of alternative or skeptical voices, evidence and perspectives becomes positively dangerous for our nation and world.

Last year, I documented an extreme case of this bias in the arena of “dangerous manmade global warming” alarmism. My individual searches on prominent skeptics of alarmist claims revealed that Google’s “authoritative source” was an obscure website called DeSmogBlog, whose claim to fame is posting nasty negative dossiers on skeptics, including me and several colleagues. (LINK to story about DeSmogBlog.com - read down to see John Lefebvre story - pleading guilty to federal money-laundering charges.)

In each search, several things immediately happened. First, Google linked to DeSmogBlog’s dossier on the skeptic, even though it might be a decade old and/or wildly inaccurate. Indeed, sometimes this was the first entry in the search results. Second, roughly half of the results were negative attacks - which should not be surprising, since the liberal press often attacks us skeptics.

Third, skeptics were often labeled as “funded by big oil,” whereas funding of alarmists by self-interested government agencies, renewable energy companies, far-left foundations or Tom Steyer (who became a billionaire by financing Asian coal mines) was generally ignored.

In stark contrast, searching for information about prominent climate alarmists yielded nothing but praise. This too is not surprising, since Google’s liberal “authoritative” sources love alarmists.

The algorithm’s bias against skeptics is breathtaking - and it extends to the climate change debate itself. Search results on nearly all climate issues are dominated by alarmist content.

In fact, climate change seems to get special algorithmic attention. Google’s special category of climate webpages, hyperbolically called “Your Money or Your Life,” requires even greater “authoritative” control in searches. No matter how well reasoned, articles questioning the dominance of human factors in climate change, the near-apocalyptic effects of predicted climate change, or the value and validity of climate models are routinely ignored by Google’s algorithms.

The algorithm also ignores the fact that our jobs, economy, financial wellbeing, living standards, and freedom to travel and heat or cool our homes would be severely and negatively affected by energy proposals justified in the name of preventing human-caused cataclysmic climate change. The monumental mining and raw material demands of wind turbines, solar panels, biofuels and batteries likewise merit little mention in Google searches. Ditto for the extensive impacts of these supposed “clean, green, renewable, sustainable” technologies on lands, habitats and wildlife.

It’s safe to say that climate change is now the world’s biggest single public policy issue. And yet Google simply downgrades and thus “shadow bans” any pages that contain “demonstrably inaccurate content or debunked conspiracy theories.” That is how alarmists describe skepticism about any climate alarm or renewable energy claims. Google does not explain how its algorithm makes these intrinsically subjective determinations as to whether an article is accurate, authoritative and thus posted - or incorrect, questionable and thus consigned to oblivion.

Google’s authority-based search algorithm is also rigged to favor liberal content over virtually all conservative content, and this may be especially true for climate and energy topics. This deep liberal bias is fundamentally wrong and un-American, given Google’s central role in our lives.

Google’s creators get wealthy by controlling access to information - and thus thinking, debate, public policy decisions and our future - by using a public internet system that was built by defense and other government agencies, using taxpayer dollars, for the purpose of ensuring the free flow of information and open, robust discussion of vital policy issues. It was never meant to impose liberal-progressive-leftist police state restrictions on who gets to be heard.

According to its “How Google Fights Disinformation” white paper, Google’s separate news search feature gets special algorithmic treatment - meaning that almost all links returned on the first page are to liberal news sources. This blatant bias stands out like a sore thumb in multiple tests. In no case involving the first ten links did I get more than one link to a conservative news source. Sometimes I got none.

For example, my news search on “Biden 2020” returned the following top ten search results, in this order: CNN, the New York Times, Vice, Politico, CNN again, Fortune, Vox, Fox News, The Hill and Politico. The only actual conservative source was Fox News, in eighth position.

Of course conservative content would not be friendly to Mr. Biden. But if Google can prominently post attacks on skeptics and conservatives, why can’t it do so for attacks on Democrats?

The highest conservative content I found was one link in eight, or 12.5 percent. About a third of my sample cases had no conservative sources whatsoever. The average of around 7% measures Google’s dramatic bias in favor of liberal sources, greatly compounding its 92.2% dominance.

The lonely conservative sources are more middle of the road, like Fox News and the Washington Examiner. Google never found or highlighted a truly conservative (what it would call “right wing”) source, like Breitbart, Townhall or the Daily Caller. It just doesn’t happen, and the algorithm clearly knows that, as does Google. So do other information and social media sites.

Of course, I’m not alone in finding or encountering this blatant viewpoint discrimination.

When coupled with the nearly complete takeover of UN, IPCC, World Bank and other global governance institutions by environmentalist and socialist forces - and their near-total exclusion of manmade climate chaos skeptics, free market-oriented economists and anyone who questions the role or impact of renewable energy - the effect on discussion, debate, education and informed decision-making is dictatorial and devastating.

No free, prosperous, modern society can survive under such conditions and restrictions. It’s time for citizens, legislators, regulators and judges to rein in and break up this imperious monopoly.

David Wojick is an independent analyst specializing in science, logic and human rights in public policy, and author of numerous articles on these topics.

Thanks to Tony Heller for this look back at July claims.

Thanks to Tony for this story on Texas fraudulent adjustments.

The Greatest Scientific Fraud Of All Time is the fraud committed by the keepers of official world temperature records, by which they intentionally adjust early-year temperature records downward in order to support assertions that dangerous human-caused global warming is occurring and that the most recent year or month is the “hottest ever.” The assertions of dangerous human-caused global warming then form the necessary predicate for tens of billions of dollars of annual spending going to academic institutions; to the “climate science” industry; to wind, solar and other alternative energy projects; to electric cars; and on and on. In terms of real resources diverted from productive to unproductive activities based on falsehoods, this fraud dwarfs any other scientific fraud ever conceived in human history.

This is Part XXIV of my series on this topic. To read Parts I through XXIII, go to this link.

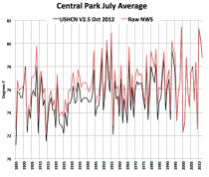

The previous posts in this series have mostly focused on particular weather stations, comparing the currently-reported temperature history for each station with previously-reported data. For example, the very first post in this series, from July 2013, looked at one of my favorite stations, the one located in Central Park in New York City. Somehow, the early-year temperatures reported for the month of July for that very prominent station had been substantially adjusted downward, thus notably enhancing a previously-slight warming trend:

[Chart: Central Park July average temperature]

Go through the various posts in this series to find dozens more such examples.

But how exactly are these downward adjustments accomplished? Just what are the games that they are playing?

Close observers of this subject have long recognized that the principal issue is something called “homogenization.” The custodians of the temperature records - principally two U.S. government agencies called NOAA and NASA - are quite up-front in declaring that they engage in “homogenization” of the temperature data. Here is an explanation from NOAA justifying changes they have made in coming up with the latest version (version 4) of their world surface temperature series, known as the Global Historical Climatology Network (GHCN). Excerpt:

Nearly all weather stations undergo changes in the circumstances under which measurements are taken at some point during their history. For example, thermometers require periodic replacement or recalibration and measurement technology has evolved over time… “Fixed” land stations are sometimes relocated and even minor temperature equipment moves can change the microclimate exposure of the instruments. In other cases, the land use or land cover in the vicinity of an observing site can change over time, which can impact the local environment that instruments are sampling even when measurement practice is stable. All of these different modifications to the circumstances of recording near surface air temperature can cause systematic shifts in temperature readings from a station that are unrelated to any real variation in local weather and climate. Moreover, the magnitude of these shifts (or “inhomogeneities”) can be large relative to true climate variability. Inhomogeneities can therefore lead to large systematic errors in the computation of climate trends and variability not only for individual station records, but also in spatial averages.

That sounds legitimate, doesn’t it? Of course, they completely slide over the fact that “homogenization” means that essentially all temperatures as now reported have been adjusted to some greater or lesser degree; and they definitely never mention the fact that the practical result of the so-called “homogenization” process is significant downward adjustments in earlier-year temperatures. So, without the adjustments, is there a real underlying warming trend? Or is the whole “warming” thing just an artifact of the adjustments? And are the adjustments as actually implemented fair or not? How do you know that they are not using the adjustment process to reverse-engineer the warming trend that they need to keep the “climate change” gravy train going?

Making things far worse is that the adjusters do not disclose the details of the methodology of their adjustments. Here is what they say about their methodology on the NOAA web page:

In GHCNm v4, shifts in monthly temperature series are detected through automated pairwise comparisons of the station series using the algorithm described in Menne and Williams (2009). This procedure, known as the Pairwise Homogenization Algorithm (PHA), systematically evaluates each time series of monthly average surface air temperature to identify cases in which there is an abrupt shift in one station’s temperature series (the “target” series) relative to many other correlated series from other stations in the region (the “reference” series). The algorithm seeks to resolve the timing of shifts for all station series before computing an adjustment factor to compensate for any one particular shift. These adjustment factors are based on the average change in the magnitude of monthly temperature differences between the target station series with the apparent shift and the reference series with no apparent concurrent shifts.

Not very enlightening.
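To make the idea in the NOAA description quoted above more concrete, here is a heavily simplified, hypothetical sketch of a pairwise-style adjustment. It is not NOAA’s Pairwise Homogenization Algorithm, which compares the target against many neighbors pair by pair and applies statistical tests to decide which shifts are real. This toy averages the reference stations, forms the target-minus-reference difference series, finds the single split with the best two-level fit, and shifts the earlier segment so it lines up with the later one. The function names `find_step` and `homogenize`, the `min_seg` parameter, and the data are all illustrative assumptions.

```python
from statistics import mean


def find_step(diff, min_seg=3):
    """Find the single step change that best splits a difference series.

    Tries every split point that leaves at least min_seg values on each side
    and keeps the one with the smallest squared error of a two-level fit.
    Returns (split_index, shift), where shift = mean(after) - mean(before).
    """
    if len(diff) < 2 * min_seg:
        raise ValueError("series too short for the requested min_seg")
    best = None
    for k in range(min_seg, len(diff) - min_seg + 1):
        before, after = diff[:k], diff[k:]
        m1, m2 = mean(before), mean(after)
        sse = sum((x - m1) ** 2 for x in before) + sum((x - m2) ** 2 for x in after)
        if best is None or sse < best[0]:
            best = (sse, k, m2 - m1)
    return best[1], best[2]


def homogenize(target, references, min_seg=3):
    """Toy single-breakpoint adjustment of a target station against neighbors."""
    # Average the reference stations year by year, then take target minus that.
    ref_avg = [mean(values) for values in zip(*references)]
    diff = [t - r for t, r in zip(target, ref_avg)]
    k, shift = find_step(diff, min_seg)
    # Hold the most recent segment fixed and move the earlier one by the shift.
    adjusted = [x + shift for x in target[:k]] + list(target[k:])
    return k, shift, adjusted


# Hypothetical annual means (deg C): the target drops 1.0 relative to its
# neighbors after year 5, while both reference stations stay flat.
ref_1 = [10.0] * 10
ref_2 = [11.0] * 10
target = [11.0] * 5 + [10.0] * 5
k, shift, adjusted = homogenize(target, [ref_1, ref_2])
print(k, shift, adjusted)
```

Note what the toy takes on faith: it treats the reference average as the true signal, so any difference between the target and its neighbors is attributed to the target station, and the correction lands entirely on the earlier years. Whether that assumption holds is exactly the question raised by the Buenos Aires example below.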

Anyway, a guy named Tony Heller, who runs a web site called The Deplorable Climate Science Blog, has just come out with a video that explains very simply and graphically how this “homogenization” process is used to lower early-year temperatures and thus create artificial warming trends. The video is only about 8 minutes long, and well worth your time:

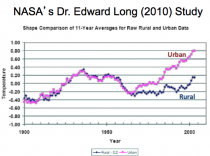

The video focuses on the region of Buenos Aires, Argentina, to demonstrate the process. In that area, there are only three temperature stations with long-term records going back as far as the early 1900s. One is called the Buenos Aires Observatory, and is located right in the middle of downtown Buenos Aires. Obviously, this station has been subject to substantial warming caused by what is known as the “urban heat island” - the buildup of asphalt and concrete and air conditioning and heating and so forth in the area immediately surrounding the thermometer. The other two sites - Mercedes and Rocha - are located some distance from town in areas far from urban buildup.

Heller shows both originally-reported and adjusted data for each of the three sites. It won’t surprise you. The Buenos Aires observatory site shows strong warming in the originally-reported data. The other two sites show no warming in the originally-reported data. The “homogenization” changes have adjusted all the thermometers to reflect a common trend of warming. For downtown Buenos Aires, the changes have somewhat reduced the originally-reported warming. For the other two stations, the changes have introduced major warming trends that did not exist at all in the originally-reported data. The trend has been inserted without changing the ongoing reporting of current data, which inherently means that the way the trend has been introduced has been by reducing the earlier-year temperatures.

In short, the bad data from downtown Buenos Aires has been used to contaminate the good data from the other two sites, and to create a false warming trend.

Is there anything honest about this? In my opinion, there is no possibility that the people who program the “homogenization” adjustments do not know exactly what the result of their adjustments will be in the temperature trends as reported to the public. In other words, it is an intentional deception. Heller asserts that the data from downtown Buenos Aires is obviously tainted by the urban heat island effect, and that the correct thing to do (if you were actually trying to come up with a temperature series to detect atmospheric warming) would be to discard the Buenos Aires data and use the unadjusted readings from the other two stations. I have to say that I agree with him.

Joseph D’Aleo, CCM

The U.S. Climate Reference Network (USCRN) is a systematic and sustained network of climate monitoring stations with sites across the conterminous U.S., Alaska, and Hawaii. These stations use high-quality instruments to measure temperature, precipitation, wind speed, soil conditions, and more. Information is available on what is measured and the USCRN station instruments.

The vision of the USCRN program is to provide a continuous (more accurate) series of climate observations for monitoring trends in the nation’s climate and supporting climate-impact research.

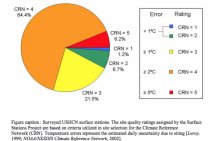

The Surface Stations project found that over 90% of long-term stations had siting issues that would produce a warm bias of 1°C or more. Urban heat island (UHI) effects were also a factor.

[Figure: USHCN stations surveyed as of 7-14-09]

According to GAO’s survey of weather forecast offices, about 42 percent of the active stations in 2010 did not meet one or more of the siting standards and were especially egregious, requiring changes. The GAO survey did not consider UHI.

The CRN was established based on the work of John Christy. Tom Karl sought funding for a complete network but was told by NOAA that satellites were the future; NOAA refused to fund a complete replacement, though some additions were made. The current network has 137 stations (up from 114). By design, these stations are properly sited and are not UHI-contaminated.

Here is the CRN network today:

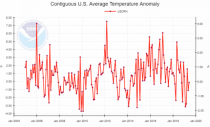

Here is a plot of monthly average anomalies since 2004 in CRN. Hmmm.